Maria Chojnowska

12 May 2023, 10 min read

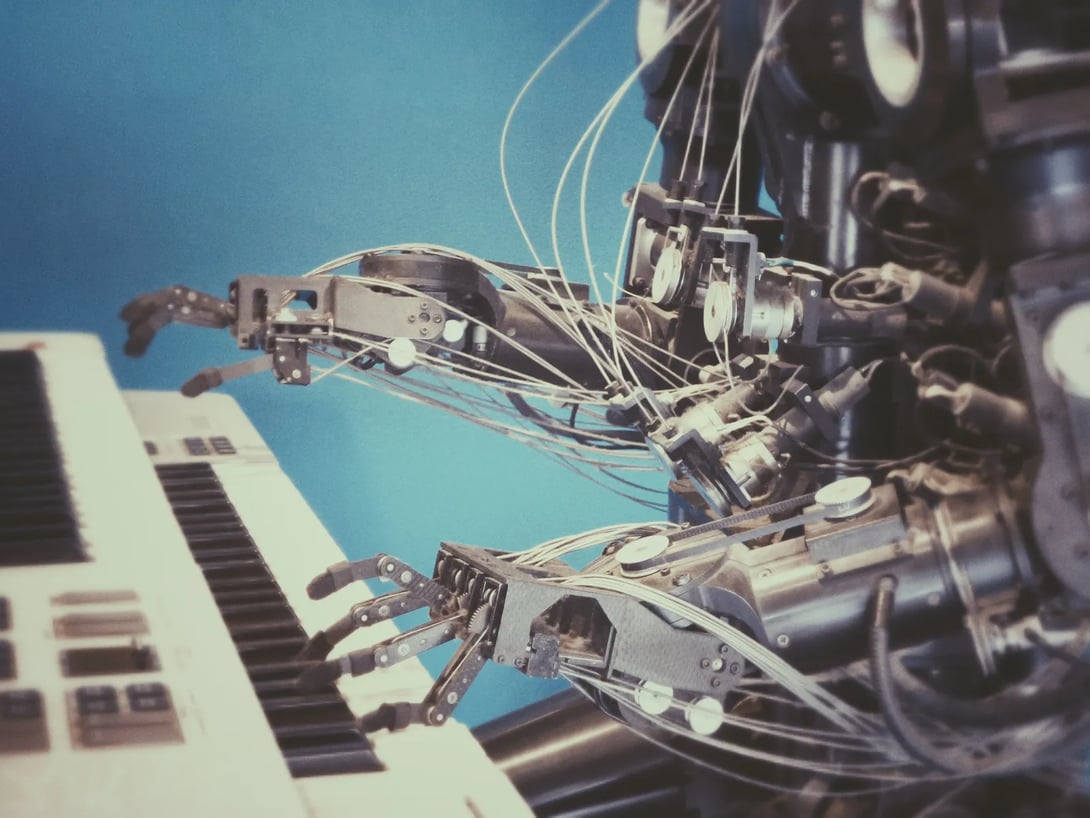

Machine Learning (ML) is a subset of Artificial Intelligence (AI) in which statistical algorithms and models improve a computer system's performance on a specific task by learning from data, rather than by following explicitly programmed rules.
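To make "learning from data" concrete, here is a minimal sketch in plain Python. It assumes a hypothetical toy dataset (hours studied versus exam score, invented purely for illustration) and uses ordinary least squares, one of the simplest statistical models. The names `fit_line` and `predict` are illustrative, not part of any library.

```python
# Hypothetical toy data, invented for illustration only.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]


def fit_line(xs, ys):
    """Estimate slope and intercept of y = slope * x + intercept by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalised).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# The relationship is estimated from the examples, not written by hand.
slope, intercept = fit_line(hours, scores)


def predict(x):
    """Apply the learned line to an input the model has not seen."""
    return slope * x + intercept


print(slope, intercept, predict(6.0))
```

Nobody wrote a rule such as "each extra hour adds two points"; the program estimated that relationship from the examples and can then apply it to new inputs. Real ML systems use far richer models and data, but the idea is the same.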