Maria Chojnowska

16 November 2023, 7 min read

What's inside

- Watch the Full Presentation

- Defining the Problem

- Data Science Workflow

- What Can Go Wrong?

- What Do We Mean by Data Quality?

- Data Quality Process

- Can Python Help Us?

- At First Glance

- In Greater Detail

- So, What to Choose?

- Let’s Solve Our Problem

- Key Takeaways

- Join the Conversation

The quality of data is paramount. There are no doubts about that. With companies collecting and processing vast amounts of data, the responsibility to ensure this data is accurate, consistent, and relevant has never been greater.

At the recent PyWaw #106 meeting, we delved into a comprehensive presentation highlighting the importance of data quality, the challenges involved, and the available tools to address these challenges.

This article is an extract from the presentation, providing insights into data quality. Feel free to share your thoughts or questions at the end of this read.

Watch the Full Presentation

To give you a deeper insight into our discussion on data quality, I have included the full video recording of the presentation from the recent PyWaw #106 meeting. The presentation sets the stage for this article and offers valuable perspectives and expert knowledge on the subject. I highly encourage you to watch it to better understand the complexities and nuances of data quality management.

The insights gathered from this presentation will be further elaborated in the sections below, where we delve into specific aspects of data quality, its challenges, and the solutions offered by Python tools.

Defining the Problem

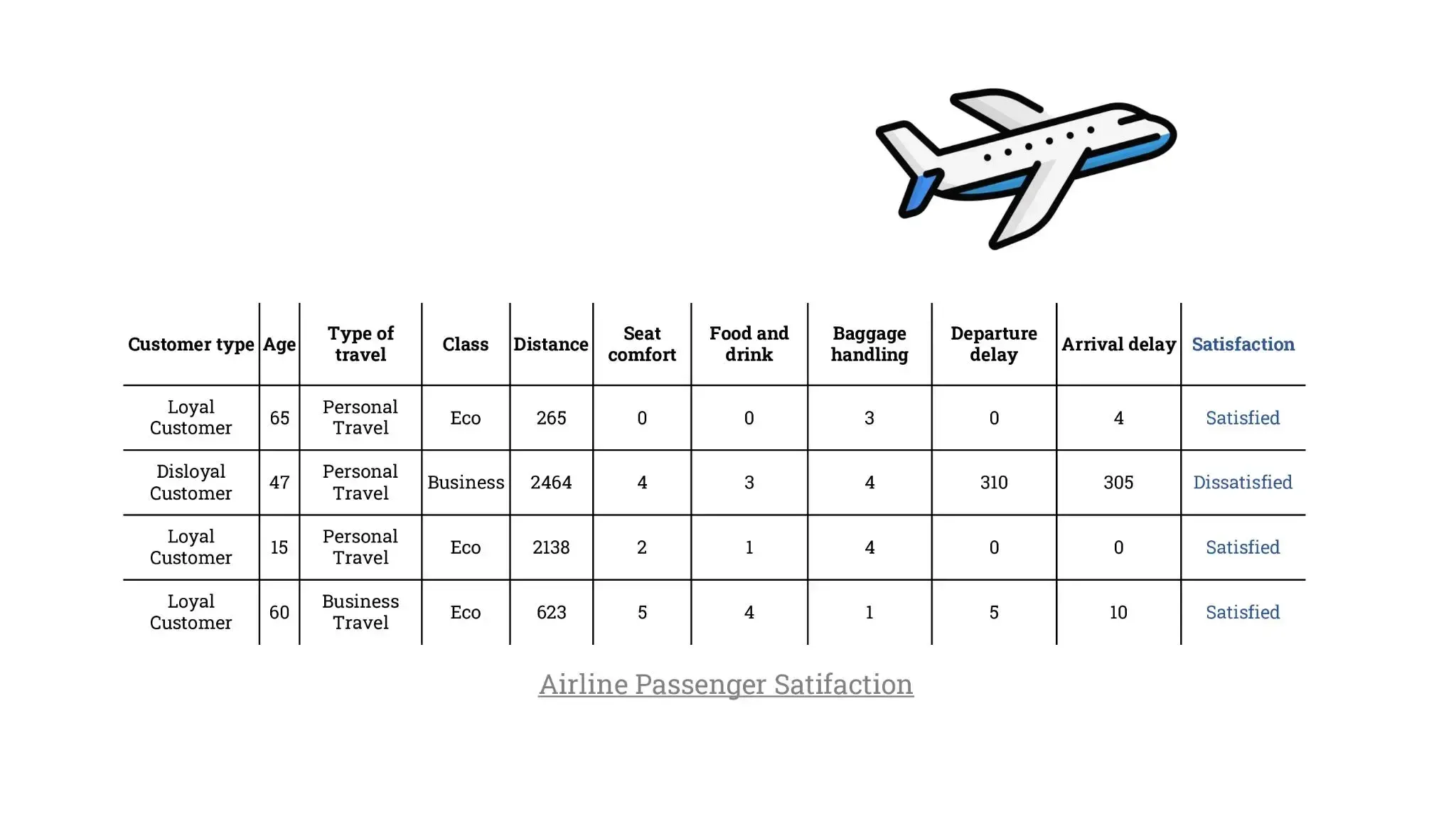

Data quality problems often start at the very inception of a data science project. For instance, consider a dataset detailing "Airline Passenger Satisfaction". Columns such as Customer type, Age, Type of travel, Distance traveled, and Satisfaction are crucial.

Each piece of data collected must be accurate to make correct business decisions and predict future trends.

Data Science Workflow

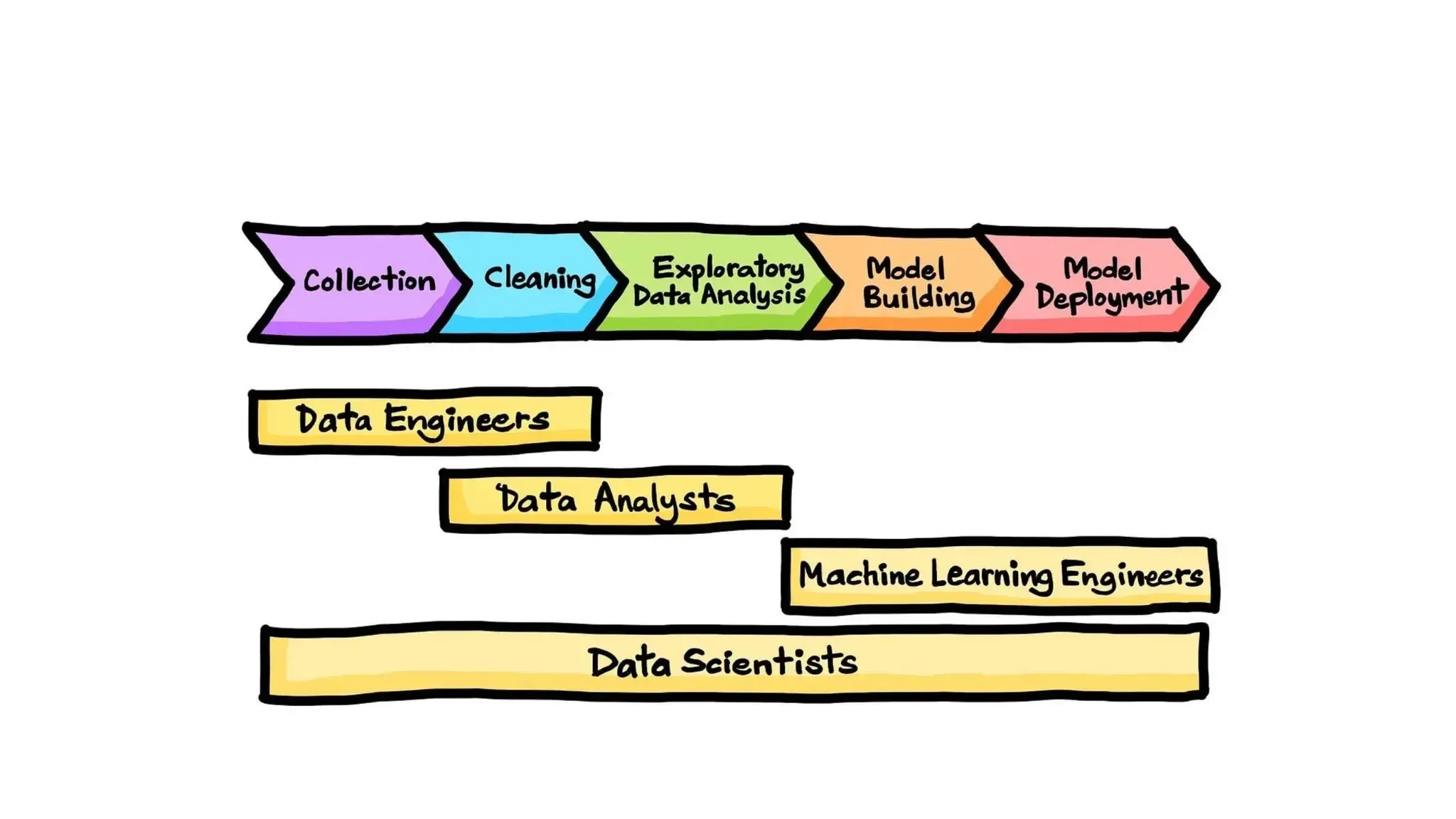

The project's lifecycle encompasses several stages:

- Collection: Gathering relevant data.

- Cleaning: Removing inconsistencies and inaccuracies.

- Exploratory Data Analysis: Understanding patterns and insights.

- Model Building: Using algorithms to predict or classify data.

- Model Deployment: Making the model available for practical use.

The success of these stages depends on the collaboration of different roles like Data Engineers, Data Analysts, Machine Learning Engineers, and Data Scientists.

What Can Go Wrong?

Like any intricate process, many pitfalls can compromise a data science project's integrity:

- Insufficient Data: Not having enough data can lead to overfitting or poorly trained models.

- Bad Data Leading to a Bad Model: Garbage in, garbage out. Poor-quality data will invariably lead to poor predictions or insights.

- Algorithm and Model Missteps: Choosing the wrong algorithm, incorrect hyperparameter tuning, or deployment errors can affect the results.

- Evaluation Issues: Using inappropriate metrics can falsely measure a model's performance.

- Requirement Collection: Misunderstood or poorly defined requirements can direct the entire project down an incorrect path.

What Do We Mean by Data Quality?

The term 'Data Quality' involves several key criteria:

- Completeness: All required data should be present.

- Consistency: There shouldn't be discrepancies in data across the dataset.

- Accuracy: Data should correctly represent real-world scenarios.

- Timeliness: Data should be relevant to the current timeframe.

- Validity: Conforming to set patterns or formats.

- Uniqueness: Duplicate entries should be absent.
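Several of the criteria above can be expressed as small, testable checks. The following is a minimal sketch in plain Python, not taken from the presentation: the sample rows, the field names (loosely modeled on the airline example), and the 0 to 120 age range are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical sample rows; field names loosely follow the airline example.
rows = [
    {"id": 1, "age": 34, "satisfaction": "satisfied"},
    {"id": 2, "age": None, "satisfaction": "neutral"},
    {"id": 2, "age": 51, "satisfaction": "satisfied"},
    {"id": 4, "age": 230, "satisfaction": "dissatisfied"},
]


def completeness(rows, field):
    """Completeness: share of rows where `field` is present (not None)."""
    return sum(r.get(field) is not None for r in rows) / len(rows)


def duplicate_keys(rows, key):
    """Uniqueness: key values that occur more than once."""
    counts = Counter(r[key] for r in rows)
    return sorted(k for k, n in counts.items() if n > 1)


def invalid_rows(rows, field, is_valid):
    """Validity: rows whose non-missing `field` value fails the predicate."""
    return [r for r in rows if r.get(field) is not None and not is_valid(r[field])]


age_completeness = completeness(rows, "age")
duplicated_ids = duplicate_keys(rows, "id")
# Assumed plausibility range for a passenger's age.
implausible_ages = invalid_rows(rows, "age", lambda age: 0 <= age <= 120)
```

Here one of four ages is missing, the id 2 appears twice, and the age 230 falls outside the assumed range, so each check surfaces exactly one problem.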

Data Quality Process

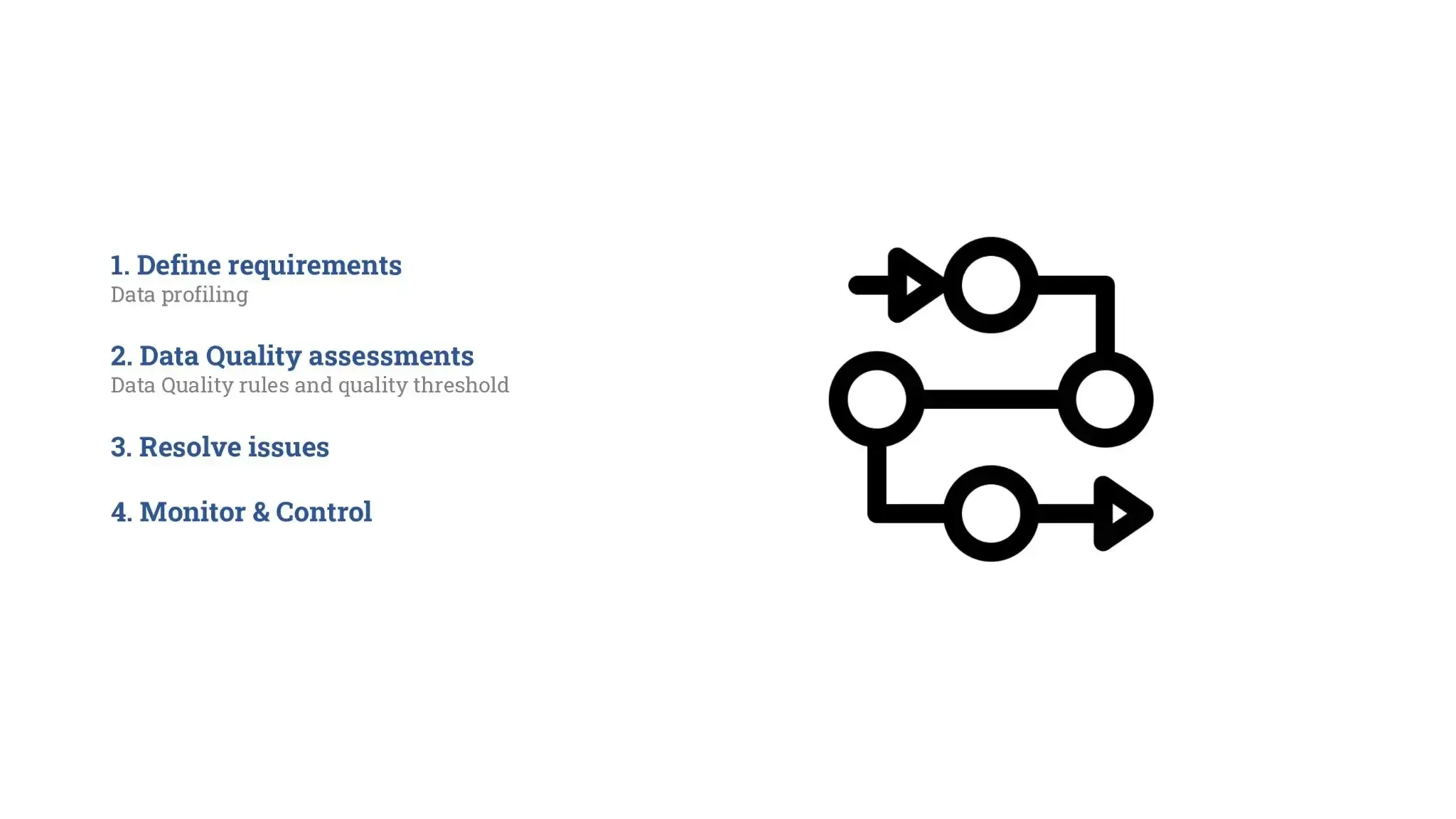

Data quality is essential in ensuring that information and insights derived from data are reliable. Let’s outline a structured approach to maintaining and enhancing data quality:

Define Requirements

Data profiling is the first step. This involves collecting statistics and information about the data, such as the range, average, and variance of numeric data or the frequency of distinct values for textual data. It allows organizations to understand the nature and structure of their data.
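As a rough illustration of what profiling produces, the sketch below computes the statistics mentioned above using only the Python standard library. The sample values and the helper names `profile_numeric` and `profile_categorical` are hypothetical; the libraries discussed later generate far richer profiles.

```python
import statistics
from collections import Counter

# Hypothetical sample columns.
ages = [34, 51, 27, 45, 38]
travel_types = ["Business", "Personal", "Business", "Business", "Personal"]


def profile_numeric(values):
    """Range, average, and sample variance of a numeric column."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        "variance": statistics.variance(values),
    }


def profile_categorical(values):
    """Frequency of each distinct value in a textual column."""
    return dict(Counter(values))


numeric_profile = profile_numeric(ages)
categorical_profile = profile_categorical(travel_types)
```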

Data Quality Assessments

Setting Data Quality rules and quality thresholds is crucial. This involves determining what constitutes good-quality data and setting boundaries or thresholds. For example, any data entry outside these parameters might be flagged for review.
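One way to picture rules and thresholds is as a list of named predicates, each with a minimum acceptable pass rate. This is only a sketch under assumed rules and thresholds; the rule names, the sample rows, and the `assess` helper are hypothetical and not part of any library.

```python
# Hypothetical sample rows.
rows = [
    {"age": 34, "distance": 460},
    {"age": 51, "distance": 1200},
    {"age": 230, "distance": 310},
    {"age": 27, "distance": 95},
]

# Each rule: (name, predicate over a row, minimum share of rows that must pass).
RULES = [
    ("age_in_range", lambda r: r["age"] is not None and 0 <= r["age"] <= 120, 0.95),
    ("distance_positive", lambda r: r["distance"] is not None and r["distance"] > 0, 1.0),
]


def assess(rows, rules):
    """Evaluate every rule and flag the rows that violate it."""
    report = {}
    for name, predicate, min_pass_rate in rules:
        flagged = [r for r in rows if not predicate(r)]
        pass_rate = 1 - len(flagged) / len(rows)
        report[name] = {
            "pass_rate": pass_rate,
            "ok": pass_rate >= min_pass_rate,
            "flagged": flagged,  # entries to send for review
        }
    return report


report = assess(rows, RULES)
```

With these assumed thresholds, the age rule fails because only three of four rows pass, and the offending row is available under `flagged` for review.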

Resolve Issues

After the assessment, the next step is addressing the issues found. This can range from minor corrections to extensive data-cleaning exercises, depending on the severity and nature of the issues identified.

Monitor & Control

Continuous monitoring and control are crucial. This ensures that the data remains of high quality over time. Regular audits, automated checks, and feedback loops can be established to promptly detect and rectify deviations from the set quality standards.
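An automated check of this kind can be as simple as comparing a tracked metric against a baseline on every run. The sketch below assumes a daily completeness metric, a fixed baseline, and a fixed tolerance; all of the values and the `find_deviations` helper are hypothetical.

```python
def find_deviations(metric_by_batch, baseline, tolerance):
    """Return labels of batches whose metric drops more than `tolerance` below `baseline`."""
    return [label for label, value in metric_by_batch if value < baseline - tolerance]


# Hypothetical completeness of a key column, measured once per daily batch.
daily_completeness = [
    ("2023-11-01", 0.99),
    ("2023-11-02", 0.98),
    ("2023-11-03", 0.90),
]

alerts = find_deviations(daily_completeness, baseline=0.98, tolerance=0.03)
```

In practice the baseline would usually come from historical batches rather than a constant, and the alerts would feed a notification channel.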

Can Python Help Us?

Python, with its vast ecosystem of libraries and tools, is instrumental in the data quality process. Some of the notable libraries include:

- great_expectations: It's a powerful tool for data validation, profiling, and documentation. It helps in setting and monitoring the data quality expectations.

- pydeequ: Inspired by the Deequ library from Amazon, pydeequ assists in defining 'unit tests for data'. It's especially potent for large datasets.

- pandera: While 'pandas' is instrumental in data manipulation, pandera complements it by providing a flexible and robust data validation toolkit.

Leveraging these tools can significantly streamline the data quality process, making it more efficient and reliable.

At First Glance

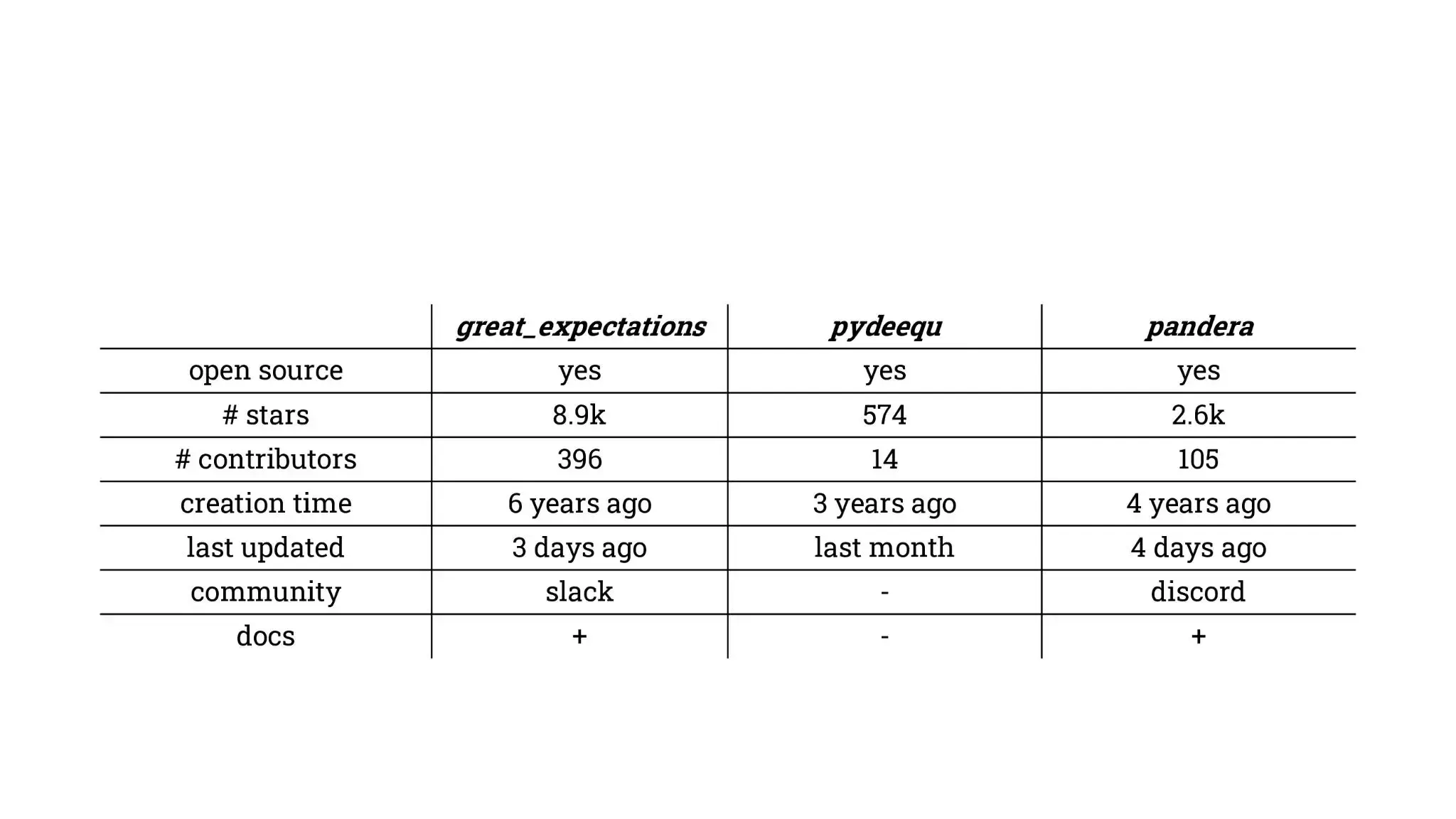

- 'great_expectations' is the most popular, with 8.9k stars and 396 contributors. It has been around for six years, recently updated, and has a community on Slack with available documentation.

- 'pydeequ' is the least popular, with 574 stars and 14 contributors. Created three years ago, its latest update was around a month ago. No specific community platform is mentioned, and the documentation is not indicated.

- 'pandera' is in the middle in terms of popularity, with 2.6k stars and 105 contributors. It's been around for four years. The community is on Discord, and documentation is available.

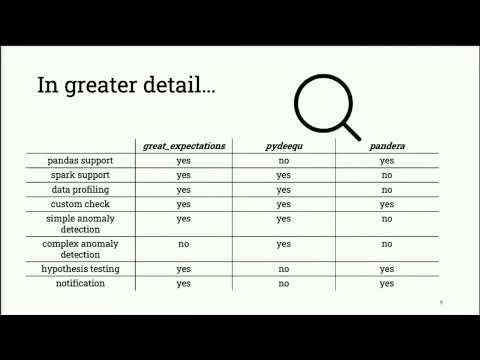

In Greater Detail

- 'great_expectations' supports both pandas and spark. It offers data profiling, custom check capabilities, simple anomaly detection, hypothesis testing, and notifications. However, it does not support complex anomaly detection.

- 'pydeequ' lacks support for pandas but supports spark. It offers data profiling, custom checks, and simple and complex anomaly detections but does not support hypothesis testing or notifications.

- 'pandera' supports pandas but not spark. It offers custom checks, hypothesis testing, and notifications, but not data profiling or simple or complex anomaly detection.

In summary:

- If you're looking for a library with a broader community and extensive features, 'great_expectations' seems the most robust.

- For specific spark support and complex anomaly detection, 'pydeequ' is the choice.

- If you mainly use pandas and need a middle-ground solution, 'pandera' is worth considering.

So, What to Choose?

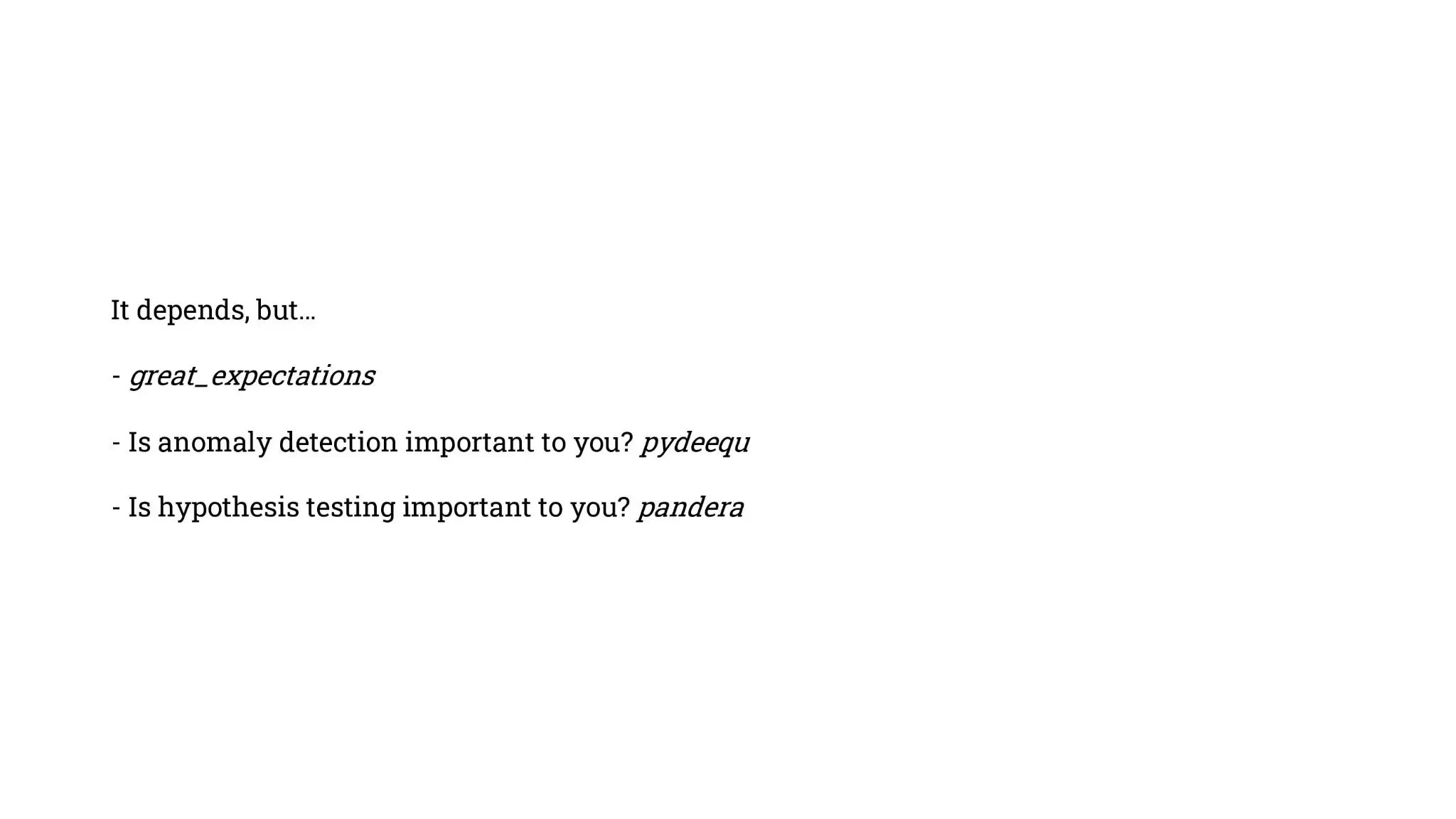

- The decision on what tool to opt for hinges on individual needs and the specific requirements of a project.

- If one is keen on having an all-encompassing tool for data validation with diverse validation capabilities, then 'great_expectations' is recommended.

- For those who prioritize anomaly detection in their datasets, 'pydeequ' is the suggested tool.

- If the emphasis is on hypothesis testing, 'pandera' becomes the go-to choice.

Let’s Solve Our Problem

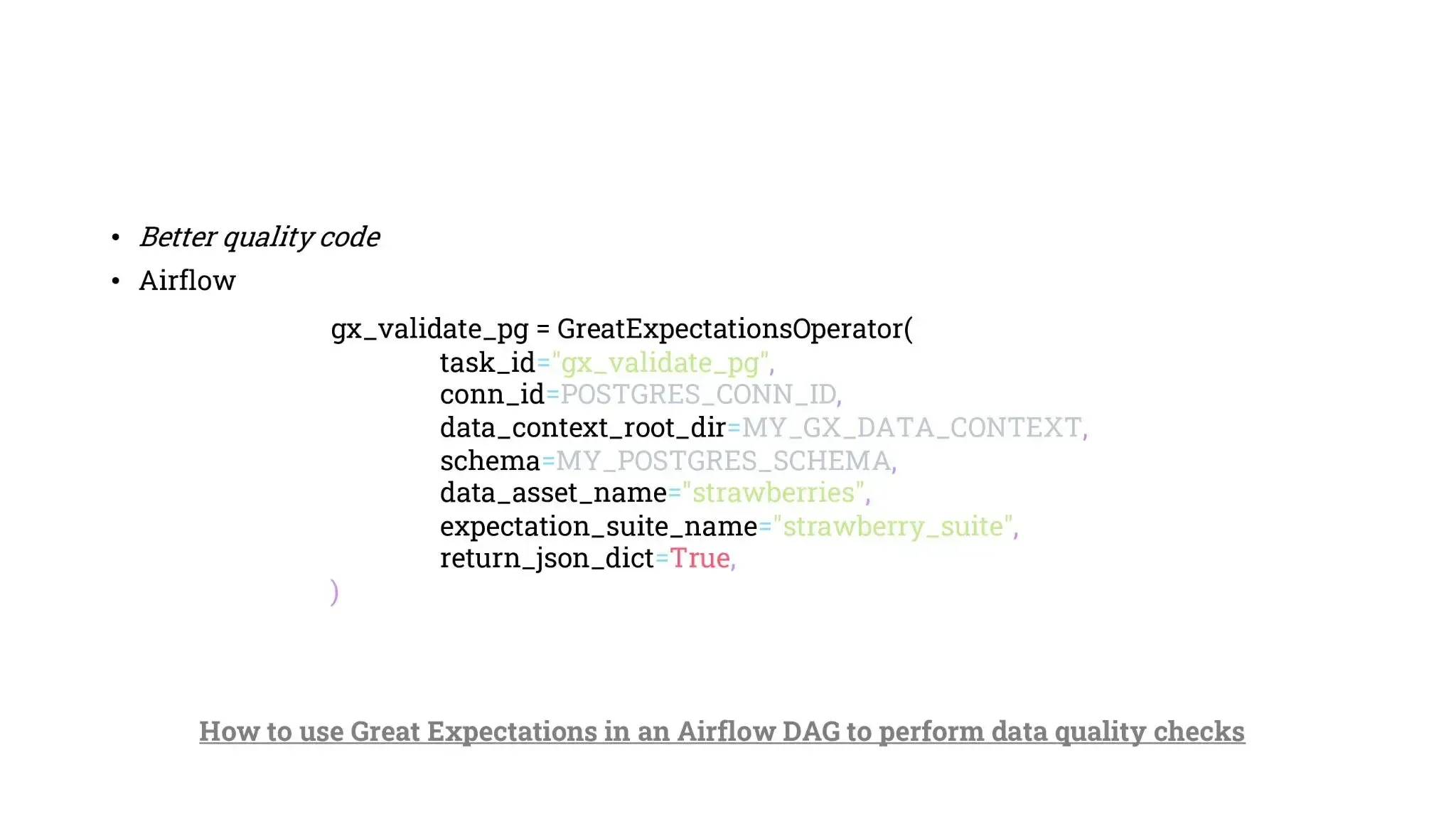

- Data Quality with Great Expectations in Airflow

While tools like Apache Airflow facilitate the orchestration of complex data workflows, ensuring the integrity and accuracy of the data being processed remains a challenge.

'GreatExpectationsOperator' is a powerful tool that integrates the data validation framework "Great Expectations" directly into Airflow.

- Prioritizing Better Quality Code

Dirty data, inaccurate analytics, or even minor errors in data processing can lead to significant operational inefficiencies, incorrect business decisions, and revenue losses. As such, prioritizing code quality in our data pipelines is not just an engineering best practice but a business imperative.

- Harnessing the Power of Airflow

Apache Airflow has rapidly become the de facto tool for orchestrating complex data workflows. Its dynamic pipeline creation, combined with its powerful UI and extensive community support, makes it an attractive option for data engineers across the globe.

- Integration with Great Expectations

While Airflow orchestrates, 'Great Expectations' validates. This Python-based tool allows users to define "expectations" for their datasets. These are assertions about data properties that must hold true for the pipeline to succeed. For instance, one might expect that every entry in a "sales" column should be a positive integer.
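To make the idea of an expectation concrete, here is a minimal plain-Python sketch of the "sales" example. It deliberately does not use the Great Expectations API: the helpers `expect_positive_integers` and `validate_or_fail` and the sample batches are hypothetical, and only illustrate the pattern of an assertion that must hold for the pipeline step to succeed.

```python
def expect_positive_integers(rows, column):
    """Hypothetical expectation: every value in `column` is a positive integer."""
    unexpected = [
        row[column]
        for row in rows
        if not (type(row[column]) is int and row[column] > 0)
    ]
    return {"success": not unexpected, "unexpected_values": unexpected}


def validate_or_fail(rows, column):
    """Raise if the expectation does not hold, so the pipeline step fails."""
    result = expect_positive_integers(rows, column)
    if not result["success"]:
        raise ValueError(
            f"Unexpected values in {column!r}: {result['unexpected_values']}"
        )
    return result


# Hypothetical batches of sales data.
good_batch = [{"sales": 3}, {"sales": 12}]
bad_batch = [{"sales": 3}, {"sales": -1}, {"sales": 2.5}]

good_result = expect_positive_integers(good_batch, "sales")
bad_result = expect_positive_integers(bad_batch, "sales")
```

Raising an exception on failure mirrors how an orchestrator treats a failed validation task: downstream tasks do not run on data that violated the expectation.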

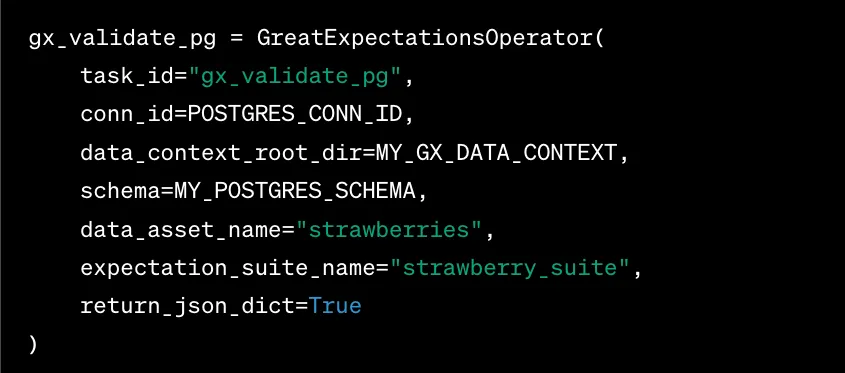

A specific implementation can look something like this:

In this code snippet, the 'GreatExpectationsOperator' is set up to validate data in a PostgreSQL database with the schema "MY_POSTGRES_SCHEMA" and a specific data asset named "strawberries". The expectations for this validation are defined in the "strawberry_suite".

Key Takeaways

Importance of Data Quality: Data quality is critical to making informed business decisions, predicting future trends, and ensuring the overall success of data science projects.

Challenges in Data Science: Various pitfalls can compromise the integrity of a data science project, such as insufficient data, poor data quality, and algorithmic missteps.

Criteria for Data Quality: Data quality is assessed based on completeness, consistency, accuracy, timeliness, validity, and uniqueness.

Data Quality Process: A structured approach to data quality includes defining requirements, assessing data quality, resolving identified issues, and continuous monitoring and control.

Python Libraries for Data Quality: The article introduces three Python libraries:

- 'great_expectations',

- 'pydeequ',

- 'pandera',

detailing their features, community support, and use cases.

Integration of Tools: The combination of 'Great Expectations' with Apache Airflow enhances data quality validation in data workflows, ensuring the processed information's accuracy and reliability.

Join the Conversation

For more insights and collaboration opportunities, join the PyWaw community and visit the PyWaw website.

Upcoming Event: PyWaw Meeting #107 on Monday, November 27, 2023, at 6:30 PM.