Łucja Włosek

23 September 2022, 7 min read

What's inside

- Flaky Tests - What Are They?

- Impact on the Testing Process

- How to Detect Flakiness?

- Tips to Avoid Flaky Tests

- Re-running Tests

- Summary

Cypress is one of the most popular JavaScript frameworks for testing software effectively. Its most valuable perks are simplicity, a snapshot visualization tool, and automatic reloading after any code change. However, as much as testers and developers worldwide enjoy writing automated tests using Cypress, some difficulties must be overcome, and flaky tests are among the most common. Before we focus on their causes, let's look at the definition of a flaky test.

Flaky Tests - What Are They?

A test is considered flaky when it gives you inconsistent outcomes across different runs, even when you've made no changes to your test code. You know that you have a flaky test when it passes on one run but fails on a subsequent run of the same code.
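The definition can be turned into a small check. The sketch below is plain JavaScript, not a Cypress API: `classifyTest` and `sometimesFails` are hypothetical names, and the "fails on every third run" behavior only stands in for a real timing-dependent failure.

```javascript
// Run the same test function several times without changing any code
// and classify it by how consistent the outcomes are.
function classifyTest(testFn, runs) {
  let passes = 0;
  for (let i = 0; i < runs; i++) {
    try {
      testFn();
      passes += 1;
    } catch (error) {
      // a thrown error counts as a failed run
    }
  }
  if (passes === runs) return 'stable pass';
  if (passes === 0) return 'stable fail';
  return 'flaky';
}

// Hypothetical test that fails on every third run.
let runCount = 0;
function sometimesFails() {
  runCount += 1;
  if (runCount % 3 === 0) throw new Error('element not found');
}

console.log(classifyTest(() => {}, 10));       // stable pass
console.log(classifyTest(sometimesFails, 10)); // flaky
```

A test that lands in the "flaky" bucket is the kind this article is about: nothing changed between runs, yet the outcome did.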

Impact on the Testing Process

Flaky tests have a massive impact on project progress. The most critical areas are:

Time

When tests pass and fail at random, we need to investigate what happened, which takes time. Tests can pass when we run them locally but fail on CI (Continuous Integration) tools. In this case, rerunning them could require rebuilding the app just to check whether the tests are unstable or we found a bug in the app. Apart from identifying the issue, we must fix it or try to prevent it, which adds cost.

Mistrust

A large number of failed tests may mean that we have bugs, or that some tests simply failed this time. Naturally, this leads to indifference to "red" results, and we start saying things like, "Yeah, those are probably flaky tests". However, a real bug may stay undiscovered when its failure looks the same as a flaky one. Moreover, some issues might not even appear in the results when a test fails before reaching the step that would reveal them.

How to Detect Flakiness?

Identifying the reason for your flaky tests is tricky as the results are random. So firstly, we must eliminate the possibility that there's something wrong with our tests.

- Make sure your tests are independent. To check that, you need to run your test suite in a different order every time. If the same tests fail due to a change of order, it might just mean they depend on the actions or results of other ones, or the test lacks data cleanup.

- Be careful with the assumptions in your tests. There are countless examples: that an element id or other locator is unique, that the order of elements in a table is always the same, that loading always takes less than a fixed cy.wait(n), and even that the time zone is the same everywhere.

- It could be an actual bug in the product. Debug your code carefully to look for examples like elements that didn't appear or disappeared too soon.
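The independence check from the first point can be sketched in plain JavaScript. Everything here is hypothetical and only models the idea: `createRandom`, `shuffle`, `runSuite`, `findOrderDependentTests`, and the three-test `suite` are illustrative names, not part of Cypress. A seeded random generator is used so that an order that fails can be reproduced later.

```javascript
// Small seeded pseudo-random generator so a failing order can be replayed.
function createRandom(seed) {
  let state = seed >>> 0;
  return function next() {
    state = (Math.imul(state, 1664525) + 1013904223) >>> 0;
    return state / 4294967296; // value in [0, 1)
  };
}

// Fisher-Yates shuffle that returns a new array.
function shuffle(items, random) {
  const result = items.slice();
  for (let i = result.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [result[i], result[j]] = [result[j], result[i]];
  }
  return result;
}

// Hypothetical suite: "lists the user" silently relies on "creates a user".
const suite = [
  { name: 'creates a user', run: (db) => { db.users.push('ann'); return true; } },
  { name: 'lists the user', run: (db) => db.users.includes('ann') },
  { name: 'shows empty cart', run: (db) => db.cart.length === 0 },
];

// Run the tests in the given order and return the names of the failures.
function runSuite(tests) {
  const db = { users: [], cart: [] }; // state shared by the whole run
  return tests.filter((test) => !test.run(db)).map((test) => test.name);
}

// Collect every test that failed in at least one shuffled order.
function findOrderDependentTests(tests, seeds) {
  const failed = new Set();
  for (const seed of seeds) {
    const order = shuffle(tests, createRandom(seed));
    runSuite(order).forEach((name) => failed.add(name));
  }
  return [...failed];
}

console.log(runSuite(suite));                           // []
console.log(findOrderDependentTests(suite, [1, 2, 3])); // [ 'lists the user' ]
```

The suite passes in its original order, but shuffling exposes the test that depends on another one. In a real project the same idea applies to the order of spec files or of tests within a file.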

When you're sure none of these cases apply to your situation, you can move on to fixing your tests to be flake-free.

Tips to Avoid Flaky Tests

One of the most frequent symptoms of a flaky test is the error: cy.find() failed because the element has been detached from the DOM.

What does that mean?

Cypress runs tests so fast that the app may not keep up with loading elements in time. Cypress will retry the last command, but that is not always enough. Let's find out how automatic and manual retries can help solve this issue.

Merge Commands

First, we need to understand how Cypress retries commands and assertions. Cypress retries commands like .get() and .find() if you make an assertion on them that fails. When retrying, Cypress re-queries the DOM until it finds the element or times out. However, Cypress will only retry the command immediately before the failed assertion in the chain. So, if you have a chain of commands with an assertion like:

cy.get("[some-element]").find("[the-child-element]").should("contain", "something")

and the assertion fails, Cypress will only retry the .find() command instead of starting from the beginning of the chain and retrying .get().

To prevent this from creating flakiness when waiting for elements to render, you need to assert on each command that you want Cypress to retry. One way to fix this is by combining .get() and .find() into a single command and then adding an assertion. This way, the .get() command will retry if the assertion fails.

Our new command and assertion will look like this:

cy.get("[some-element] [the-child-element]").should("contain", "something")
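To see why merging helps, here is a simplified plain-JavaScript model of the retry behavior described above. It is not how Cypress is implemented: `createFakeApp`, `retry`, `splitLookup`, and `mergedLookup` are hypothetical names, and the fake app simply swaps its parent node on the second render tick.

```javascript
// Fake "DOM": the app replaces the parent node on its second render tick,
// and only the new parent contains the child.
function createFakeApp() {
  let tick = 0;
  const oldParent = { children: [] };
  const newParent = { children: [{ text: 'something' }] };
  return {
    advance() { tick += 1; },
    getParent() { return tick < 2 ? oldParent : newParent; },
  };
}

// Re-run `step` until it yields something or the attempt budget runs out.
function retry(step, app, maxAttempts) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = step();
    if (result !== undefined) return result;
    app.advance(); // let the app render a bit more before the next attempt
  }
  return undefined; // "timed out"
}

// Split chain: the parent is resolved once, only the child lookup is retried.
function splitLookup(maxAttempts) {
  const app = createFakeApp();
  const parent = app.getParent(); // becomes stale after the re-render
  return retry(() => parent.children[0], app, maxAttempts);
}

// Merged lookup: parent and child are resolved together on every attempt.
function mergedLookup(maxAttempts) {
  const app = createFakeApp();
  return retry(() => app.getParent().children[0], app, maxAttempts);
}

console.log(splitLookup(5));  // undefined
console.log(mergedLookup(5)); // { text: 'something' }
```

The split version keeps retrying against a parent that is no longer part of the page, while the merged version picks up the re-rendered parent on its third attempt.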

More assertions

The DOM tree will sometimes re-render between the execution of cy.get() and the .click() command. The test and the application then get into a race condition that leads to the "detached element" error. Cypress automatically retries the last command, which in this case is .click(), but the element that cy.get() found is no longer attached to the DOM. To prevent that, you can use assertions to ensure your element is in the state you need at this step.

Let’s take a look at an example.

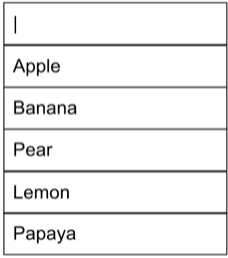

It's a search box with dynamic results. Firstly, you need to click on an input to show all the options. Then, when you type any character, it filters the possibilities and shows only ones with the searched value.

An example test could look like this:

// open the container

cy.get('#search-box').click()

// type into the input element to trigger search

cy.get('#search-input').type('a')

// select a value

cy.contains('#results-list', 'Papaya').should('be.visible').click()

// confirm that value is selected

cy.get('#search-box').should('have.text', 'Papaya')

The test passes locally but occasionally fails when running on CI. Why? The screenshot Cypress generated after the error shows "a" typed into the search box and four results, which is the correct behavior. It looks like the test failed for no reason. To investigate, we need to slow down the test.

Let's use .pause() after the first step:

// open the container

cy.get('#search-box').click().pause()

Now when we run the test, it stops right after clicking on the search box. What happens? Before the results load, there is a glimpse of an empty list, barely noticeable to a user. The request is answered very quickly, but it turns out Cypress is faster. The test doesn't check the results list at this point, but the next step filters it and checks whether "Papaya" exists. The same glimpse happens there, too, so Cypress might be checking the unfiltered results. How can we fix it?

Assertions are a great tool in this case. First, we should check that the list has loaded, and then that it has been filtered.

// open the container

cy.get('#search-box').click()

// assertion - check the number of items in our list (assumes the options are direct children of #results-list)

cy.get('#results-list').children().should('have.length', 5)

// type into the input element to trigger search

cy.get('#search-input').type('a')

// assertion - check the number of items in the filtered list (same assumption about direct children)

cy.get('#results-list').children().should('have.length', 4)

// select a value

cy.contains('#results-list', 'Papaya').should('be.visible').click()

// confirm that value is selected

cy.get('#search-box').should('have.text', 'Papaya')

Now that we are sure the results are ready to be checked, the test passes even in slower environments. Whatever the problem in your scenario is, assertions should help, but the be.visible chainer alone may not be enough. In this case, the list container was visible; it was just empty.

Re-running Tests

The single command retry-ability may not be enough to eliminate flaky tests. Sometimes the test fails because the backend server is slower than usual or temporarily unavailable. Cypress provides a simple way to re-run a failing test without writing more code. Here’s the first example, which will retry one particular test if it fails:

it('my flaky test', { retries: 2 }, () => {

  cy.visit('https://localhost:3000/')

  // …and more test steps

})

With retries: 2, the test will run up to 3 times: the initial attempt plus two retries. You can also specify the number of retries separately for each mode:

retries: {

runMode: 2,

openMode: 0

}

With this configuration, a failing test is attempted a maximum of 3 times when run with "cypress run" and is not retried in "cypress open". If you want a more global approach to retries, you can set that up in your configuration file (cypress.json before Cypress 10, cypress.config.js from Cypress 10 on), so it applies to all tests in your project:

"retries": 2

or

"retries": {

"runMode": 2,

"openMode": 0

}

Summary

In conclusion, while Cypress offers a powerful and efficient platform for JavaScript testing, flaky tests can significantly impede project progress. Time-consuming investigations, mistrust in test results, and potential oversight of actual bugs are just a few challenges flaky tests present. To mitigate these issues, meticulous identification of flakiness causes and proactive measures are essential.

To ensure stable and reliable test outcomes, consider implementing strategies such as merging commands, introducing more assertions, and utilizing Cypress' retry mechanism. By addressing common issues like element detachment and race conditions, you can enhance the consistency of your test suite.

If you're seeking further guidance, Sunscrapers, your dedicated tech partner, is here to assist. Contact us to unlock the full potential of your testing processes. Let's collaborate to streamline your testing framework and propel your projects to success!