Michał Żelazkiewicz

9 January 2024, 6 min read

I set out to test the Named Entity Recognition capabilities of the well-established SpaCy against brand-new Large Language Models such as OpenAI ChatGPT, Llama2, and Google Bard, to check whether LLMs bring new quality to this area.

For those of you who don’t know:

Natural Language Processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human (natural) languages. It involves making it possible for computers to read, understand, and generate human language.

Named Entity Recognition (NER) is an NLP task that aims to identify specific entities in a text and assign them to predefined categories, such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, and percentages.
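To make the definition concrete, here is a minimal sketch of what NER output usually looks like: a list of text spans, each with a category label and character offsets. The sentence is constructed for illustration from one of the benchmark entries, the annotations are written by hand rather than produced by any model, and the `Entity`, `annotate`, and `group_by_label` names are hypothetical helpers, not part of any library.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    text: str   # the exact surface form found in the text
    label: str  # the predefined category, e.g. PERSON or LOCATION
    start: int  # character offset where the entity starts
    end: int    # character offset one past the last character


def annotate(text: str, mentions: list[tuple[str, str]]) -> list[Entity]:
    """Turn hand-written (mention, label) pairs into spans with offsets."""
    entities = []
    for mention, label in mentions:
        start = text.find(mention)
        if start == -1:
            raise ValueError(f"mention not found in text: {mention!r}")
        entities.append(Entity(mention, label, start, start + len(mention)))
    return entities


def group_by_label(entities: list[Entity]) -> dict[str, list[str]]:
    """Collect entity texts under their category label."""
    grouped = defaultdict(list)
    for entity in entities:
        grouped[entity.label].append(entity.text)
    return dict(grouped)


# Illustrative sentence, not a quote from the tested article.
sentence = "Kevin Cole is the Mayor of Pearland."
entities = annotate(sentence, [("Kevin Cole", "PERSON"), ("Pearland", "LOCATION")])
grouped = group_by_label(entities)

for entity in entities:
    print(entity.label, repr(sentence[entity.start:entity.end]))
```

A real NER tool produces the spans and labels itself; the point here is only the shape of the result that the rest of the test compares against a benchmark.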

About the Test

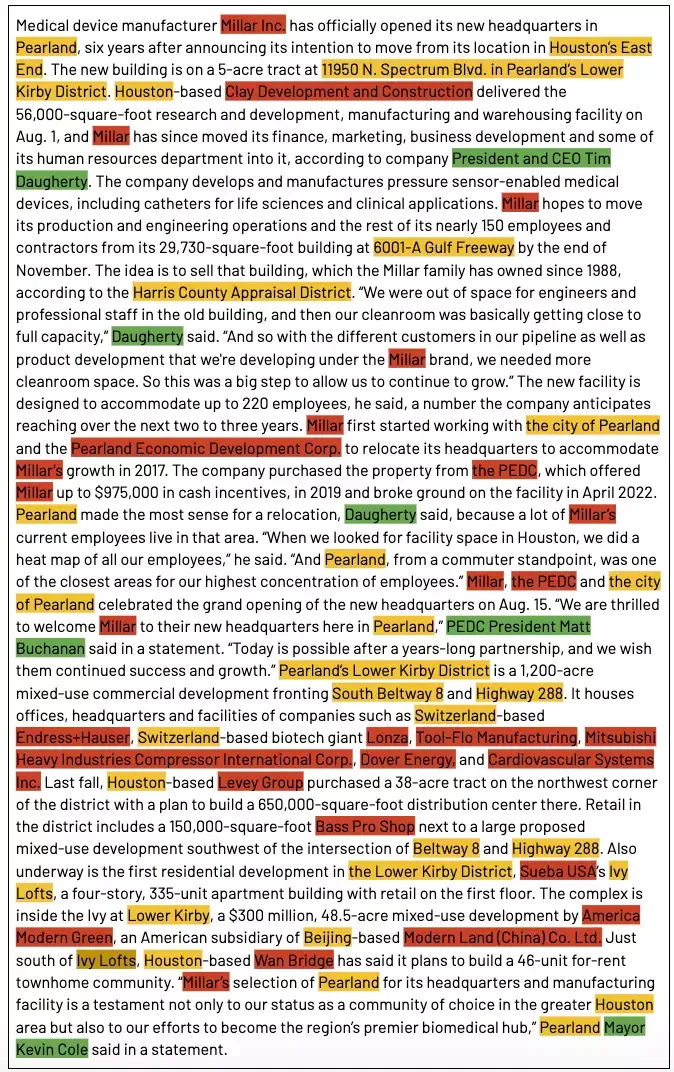

Due to my professional interests and experience, I decided to check how well the selected tools would extract named entities such as people's names, organization names, and locations from a real estate news article. Even today, such tasks may still be performed by human moderators, so any improvement in this area could bring serious efficiency gains for people and companies.

A real estate article makes a good basis for the test: the one I selected is packed with intricate names and details and is, in general, not trivial to interpret.

Here’s the preview of the selected article:

Here is access to the full text.

It’s also important to highlight specific difficulties that NLP tools will face in this test:

- People Names: Recognizing names, especially within a dense text, is foundational for any NLP tool. Moreover, distinguishing between first names, last names, and titles offers insights into the tool's depth of understanding. By selecting diverse name structures from the article, I aimed to see how effectively the tools could navigate these nuances.

- Organization Names: These often come with their own challenges. Organizations can have names similar to common words or other entities. Their accurate recognition is vital for numerous applications, such as database management, market analysis, or sales strategy formation.

- Location Names: Accuracy in detecting location names is pivotal, especially in sectors like real estate, logistics, or travel. Moreover, with location names ranging from city names to specific addresses, this criterion also tests the granularity of the NLP tool's detection capability.

Benchmark

For an effective comparison, I set forth a list of entities taken from the article, which should be recognized by the tested tools.

People Names:

- Tim Daugherty, President and CEO of Millar Inc.

- Matt Buchanan, President of Pearland Economic Development Corp.

- Kevin Cole, Mayor of Pearland

Organization Names:

- Millar Inc.

- Clay Development and Construction

- Pearland Economic Development Corp. (PEDC)

- Endress+Hauser

- Lonza

- Tool-Flo Manufacturing

- Mitsubishi Heavy Industries Compressor International Corp.

- Dover Energy

- Cardiovascular Systems Inc.

- Leavy Group

- Bass Pro Shop

- Sueba USA

- America Modern Green

- Modern Land (China) Co. Ltd.

- Wan Bridge

Location Names:

- Pearland

- Houston’s East End

- 11950 N. Spectrum Blvd.

- Lower Kirby District

- 6001-A Gulf Freeway

- Harris County Appraisal District

- South Beltway 8

- Highway 288

- Ivy Lofts
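A benchmark list like this can also be compared against a tool's output automatically. The sketch below is one possible approach, not the scoring used in this article (which awards 1 to 3 points per category by manual review): it computes set-based precision, recall, and F1 after light normalization. The `normalize` and `score` helpers and the sample tool output are hypothetical and were invented for illustration.

```python
from dataclasses import dataclass


def normalize(name: str) -> str:
    """Lowercase, straighten curly apostrophes, and collapse whitespace."""
    return " ".join(name.replace("’", "'").lower().split())


@dataclass
class Score:
    precision: float  # share of returned names that are in the benchmark
    recall: float     # share of benchmark names that were returned
    f1: float         # harmonic mean of precision and recall


def score(expected: list[str], predicted: list[str]) -> Score:
    gold = {normalize(name) for name in expected}
    found = {normalize(name) for name in predicted}
    hits = len(gold & found)
    precision = hits / len(found) if found else 0.0
    recall = hits / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return Score(precision, recall, f1)


# People from the benchmark list above.
PEOPLE = ["Tim Daugherty", "Matt Buchanan", "Kevin Cole"]

# Hypothetical tool output (not a measured result): all three people found,
# plus one location wrongly returned as a person.
hypothetical_output = ["tim daugherty", "Matt Buchanan", "Kevin Cole", "Pearland"]

result = score(PEOPLE, hypothetical_output)
print(f"precision={result.precision:.2f} recall={result.recall:.2f} f1={result.f1:.2f}")
```

Exact matching after normalization is deliberately strict; variants such as "PEDC" versus "Pearland Economic Development Corp." would need an alias table or a manual check.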

With the benchmarks in place, let’s look at how each of the tested tools performed in the test.

Test Results

Here are the results of our test: NLP Test Results

People Names

The analysis began with recognizing people's names from the article.

Results:

- All tools correctly discovered all three People's Names mentioned in the article and returned their names and surnames.

- SpaCy misclassified some of the Locations and Organization Names as People Names.

- All LLMs returned additional information about each person by default, which SpaCy's entity recognizer does not do: it returns only the entity text and its label.

The best results in the People Names category (name, surname, position, and company) were provided by Bard, Llama2, and GPT-4.

A bit worse, but still very accurate, was GPT-3.5: it did not find the company name of one person, which appeared in a separate part of the text. This suggests that GPT-3.5 is somewhat weaker at full-text understanding than its competitors.

The last place belongs to SpaCy, which provided only basic information about people (just name and surname) and also mixed it with incorrect information.

Top performer: Bard, Llama2, and GPT-4.

Organization Names

Shifting the focus to organization names revealed some distinct capabilities and gaps.

Results:

- Misclassifications were common across the board, with different tools making different errors.

- Both GPT models showcased depth by not just identifying the organizations but also providing additional context.

- Llama2 lacked the deeper context that the GPT models offered.

- Bard and SpaCy had more pronounced misses in this category.

Here, the results were varied and showed different strengths and weaknesses of the analyzed models.

First, every model misclassified exactly one Location Name as an Organization Name. SpaCy and GPT models misclassified “Harris County Appraisal District”, and Llama2 and Bard misclassified “City of Pearland”.

Both GPT models returned the best result, each missing one name (a different one in each case) but earning bonus points for additional detailed information about the listed organizations. Second place belongs to Llama2, with one missing name but no additional context. Bard and SpaCy landed in third place, with a few missing organization names and no additional information.
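Cross-category errors of the kind described above, where a benchmark location is returned as an organization, can be flagged mechanically once both the benchmark and the tool output are grouped by category. The following is a minimal sketch under assumptions: `find_misclassified` is a hypothetical helper, the benchmark is a shortened subset of the full list, and the tool output is simplified for illustration, modeled only on the "Harris County Appraisal District" error mentioned in the text.

```python
def find_misclassified(
    benchmark: dict[str, list[str]],
    output: dict[str, list[str]],
) -> list[tuple[str, str, str]]:
    """Return (entity, expected_category, returned_category) for every
    benchmark entity that the tool filed under the wrong category."""
    expected_category = {
        name.lower(): category
        for category, names in benchmark.items()
        for name in names
    }
    errors = []
    for returned_category, names in output.items():
        for name in names:
            expected = expected_category.get(name.lower())
            if expected is not None and expected != returned_category:
                errors.append((name, expected, returned_category))
    return errors


# Shortened subset of the benchmark, for brevity.
benchmark = {
    "people": ["Tim Daugherty", "Matt Buchanan", "Kevin Cole"],
    "organizations": ["Millar Inc.", "Lonza", "Dover Energy"],
    "locations": ["Pearland", "Harris County Appraisal District", "Highway 288"],
}

# Simplified, illustrative output: one benchmark location appears
# under organizations.
tool_output = {
    "people": ["Tim Daugherty", "Matt Buchanan", "Kevin Cole"],
    "organizations": ["Millar Inc.", "Lonza", "Harris County Appraisal District"],
    "locations": ["Pearland", "Highway 288"],
}

errors = find_misclassified(benchmark, tool_output)
for name, expected, returned in errors:
    print(f"{name}: expected {expected}, returned as {returned}")
```

Names that are not in the benchmark at all, such as "City of Pearland", are ignored by this check and still need a human decision.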

Top performer: GPT-4, GPT-3.5

Locations

The assessment of location name recognition was crucial, given the context of the article.

Results:

- SpaCy's results were basic and its ability to distinguish nuanced location details was limited.

- Llama2 and Bard showed better accuracy in recognizing specific locations but did not offer extended context.

- GPT-4 outperformed others, delivering an almost flawless recognition and contextual understanding of locations.

Here, we can see three different approaches to the problem.

SpaCy provided only names of cities, countries, and areas, without any additional context. Street names weren’t recognized at all. It also returned fragments of “___-based” phrases and of company names as locations.

Llama2 and Bard focused on the locations themselves, without any additional information, but correctly discovered some of the streets.

The best results were provided by ChatGPT, which correctly returned nearly all locations with additional context. The only exception was the Harris County Appraisal District - earlier misclassified as an organization.

Top Performer: GPT-4.

Summary

The test results clearly show the gap between the capabilities of SpaCy and selected LLMs and the differences in their understanding of an article as a whole.

The Final Verdict

Let’s summarize the scores in the table below:

| | SpaCy | Llama2 | GPT-3.5 | GPT-4 | Bard |
|---|---|---|---|---|---|
| People | 1 | 3 | 2 | 3 | 3 |
| Organizations | 1 | 2 | 3 | 3 | 1 |
| Locations | 1 | 2 | 3 | 3 | 2 |
| Sum | 3 | 7 | 8 | 9 | 6 |

The final ranking is as follows:

- GPT-4 - 9 points

- GPT-3.5 - 8 points

- Llama2 - 7 points

- Bard - 6 points

- SpaCy - 3 points
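The totals and the ranking follow directly from the per-category scores. As a quick check, the short sketch below recomputes them; the scores are copied from the table, while the variable names are only illustrative.

```python
# Per-category scores copied from the table (1 = weakest, 3 = best).
scores = {
    "SpaCy":   {"People": 1, "Organizations": 1, "Locations": 1},
    "Llama2":  {"People": 3, "Organizations": 2, "Locations": 2},
    "GPT-3.5": {"People": 2, "Organizations": 3, "Locations": 3},
    "GPT-4":   {"People": 3, "Organizations": 3, "Locations": 3},
    "Bard":    {"People": 3, "Organizations": 1, "Locations": 2},
}

# Sum the three categories for each tool.
totals = {tool: sum(by_category.values()) for tool, by_category in scores.items()}

# Sort by total points, highest first.
ranking = sorted(totals.items(), key=lambda item: item[1], reverse=True)

for place, (tool, points) in enumerate(ranking, start=1):
    print(f"{place}. {tool} - {points} points")
```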

Conclusion

We have confirmed a significant gap between the accuracy of SpaCy and modern LLMs. LLMs consider not only the direct surroundings of each word but also the context of the whole article. This makes them more accurate and helps them better understand and recognize Named Entities.

This difference causes SpaCy to return only the exact contents of the article without any additional information. In the case of LLMs - especially GPT-3.5 and GPT-4 - there was much more data in the answer, which helped us understand the results.

This quick test shows that LLMs are far more advanced and accurate in tasks related to information extraction, can find indirect relations between different pieces of data, and generate more accurate and precise responses. However, they are not yet perfect, and we need to take into account that we cannot fully trust the results, hence an element of human moderation is still recommended.