Patryk Młynarek

21 February 2024, 15 min read

What's inside

- Introduction

- HTMX Performance vs Standard approach

- Initial Load vs Lazy Load: Locust testing

- E2E testing with Playwright:

- Boosting Links and Forms with HTMX

- Enhancing FastAPI Security with Templates

- Summary

Introduction

In our previous article, we got to know FastAPI, HTMX, Jinja2, DaisyUI, and Tailwind CSS, and saw why this mix is so powerful. Now, let's dig deeper and look at several approaches in more detail.

Our journey continues as we explore HTMX and FastAPI in more detail. We'll look closely at how they perform and how to make them more secure. This exploration is about helping you really understand how strong these technologies are when used together. Come along as we dig deep into these tools, uncovering clever tricks that make them even better.

Note: As in our previous exploration, we'll be using a dedicated repository created specifically for this article. Feel free to download it and experiment firsthand with all the concepts discussed. Don't forget to use the bootstrap script to generate fake data for testing purposes! For more information, see the README.

HTMX Performance vs Standard approach

HTMX is known for its modern development style and fast performance. Now, let's take a closer look to see if that's really true. We'll discuss two ways of doing things: one using HTMX to reload only part of the page, and the other using the standard method where the whole page reloads.

We'll explore how each method looks in practice, helping you make an informed decision based not only on performance but also on the ease and appearance of implementation.

Note: Explore the capabilities yourself by testing it with the provided repository and navigating through the following pages:

The data used for testing consists of 1 million bootstrapped rows in the database, generated by the script (make bootstrap). This bootstrapped data mimics a realistic dataset, allowing us to assess the system's performance under substantial load.

First, let's try the standard approach: fully load the page and return all data at once. With this approach, loading the page is time-consuming, taking around 1.246 seconds on my local machine during testing. This delay comes mainly from three things that happen inside the fun_fact_full_load endpoint, two database fetches and one simulated wait:

- Fake Fun Facts rows

- Fake Fun Facts count

- Simulating waiting for external resources using asyncio.sleep(1)

Despite utilizing bootstrapped data, this method not only exhibits slowness but also provides a poor user experience. Users are left waiting until the entire page is fully loaded.

Now, let's delve into the second approach – partial load. This strategy divides the work across four endpoints, significantly enhancing the user experience. Initially, only the application's skeleton, the visual part, is called, leading to faster page rendering. During this first request load, visually appealing loaders indicate that data fetching is in progress. Meanwhile, in the background, three requests are made to fetch the necessary data. Once this data retrieval process is complete, the results are seamlessly displayed, demonstrating a significant enhancement in the user experience.

This method not only improves user experience but also enhances performance by reloading only the specific data needed.

Breaking down the partial load approach requests:

- First request: completes almost immediately, taking only 0.003 seconds (3 ms) before the user sees the page skeleton.

- Once the user can see the page, the background requests start:

  - Get actual Fun Facts: 0.179 seconds (179 ms)

  - Get Fun Facts count for the header: 0.023 seconds (23 ms)

  - Simulating waiting for external resources: 1.010 seconds (1,010 ms)

As a result, the most important data reaches the user within 0.182 seconds (3 ms + 179 ms), including the skeleton of the page and the actual Fun Facts. The remaining content of the page can continue to load in the background while the user interacts with our website.
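The arithmetic behind these figures can be written out as a quick sanity check. The sketch below uses only the timings reported above. The assumption that the three background requests run concurrently, so that the page is complete after the slowest one, is ours and was not measured in the article.

```python
# Timings reported above, in seconds (measured on the author's local machine).
FULL_LOAD_S = 1.246
SKELETON_S = 0.003
FACTS_S = 0.179
COUNT_S = 0.023
EXTERNAL_S = 1.010

# Time until the user sees the skeleton plus the actual Fun Facts.
time_to_facts = SKELETON_S + FACTS_S

# Assumption: the background requests start together once the skeleton has
# arrived, so the page is complete after the slowest of them.
time_to_complete = SKELETON_S + max(FACTS_S, COUNT_S, EXTERNAL_S)

print(f"Facts visible after: {time_to_facts:.3f} s")
print(f"Page complete after: {time_to_complete:.3f} s")
print(f"Full load, for comparison: {FULL_LOAD_S:.3f} s")
```

Under that assumption the total time to a complete page is similar in both approaches; what changes is how early the user sees useful content.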

To measure these execution times, a simple script was employed, utilizing the measure_average_execution_time function. This function takes an endpoint URL and the number of requests as parameters, providing insights into the average execution time for the specified endpoint:

import time
from typing import List

import requests


def measure_average_execution_time(endpoint_url: str, num_requests: int) -> float:
    """
    Measure the average execution time of making requests to the specified endpoint.

    Parameters:
    - endpoint_url (str): The URL of the endpoint to measure.
    - num_requests (int): The number of requests to make for calculating the average execution time.

    Returns:
    - float: The average execution time in seconds.
    """
    execution_times: List[float] = []

    for _ in range(num_requests):
        start_time = time.time()
        requests.get(endpoint_url)  # noqa
        end_time = time.time()
        execution_time: float = end_time - start_time
        execution_times.append(execution_time)
        print(f"Executed in: {execution_time}")

    average_time: float = sum(execution_times) / num_requests
    print(f"Average execution time: {average_time}")
    return average_time


# url: str = "http://localhost:8000/fun-fact-full-load"
# url: str = "http://localhost:8000/fun-fact-partial-load"
# measure_average_execution_time(endpoint_url=url, num_requests=10)
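A plain average can be skewed by a single slow request, for example the first one after the server starts. As a possible variant, the sketch below also reports the median, minimum, and maximum. The function name summarize_timings and its fetch parameter are hypothetical and not part of the article's repository; fetch is any zero-argument callable, which keeps the helper independent of a particular HTTP client.

```python
import statistics
import time
from typing import Callable, Dict, List


def summarize_timings(fetch: Callable[[], object], num_requests: int) -> Dict[str, float]:
    """Call `fetch` repeatedly and summarize how long each call took, in seconds."""
    if num_requests < 1:
        raise ValueError("num_requests must be at least 1")

    timings: List[float] = []
    for _ in range(num_requests):
        # perf_counter is a monotonic clock intended for measuring intervals.
        start = time.perf_counter()
        fetch()
        timings.append(time.perf_counter() - start)

    return {
        "mean": statistics.mean(timings),
        "median": statistics.median(timings),
        "min": min(timings),
        "max": max(timings),
    }
```

With the requests library installed, it could be called as summarize_timings(lambda: requests.get("http://localhost:8000/fun-fact-full-load"), 10).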

Initial Load vs Lazy Load: Locust testing

Leveraging HTMX, we have the capability to effortlessly enhance our website, optimizing page loading speed and increasing interactivity. In order to assess and compare the effectiveness of the Initial Load and Lazy Load approaches, we turn to Locust—an open-source load testing tool.

Utilizing Locust is straightforward; we define user behavior through Python code, allowing us to simulate millions of simultaneous users swarming our system. The scripts used to generate the results presented below are conveniently included in our testing repository, providing you with the opportunity to conduct your own experiments.

Note: To run prepared performance tests use commands:

make perf-tests-full
make perf-tests-partial

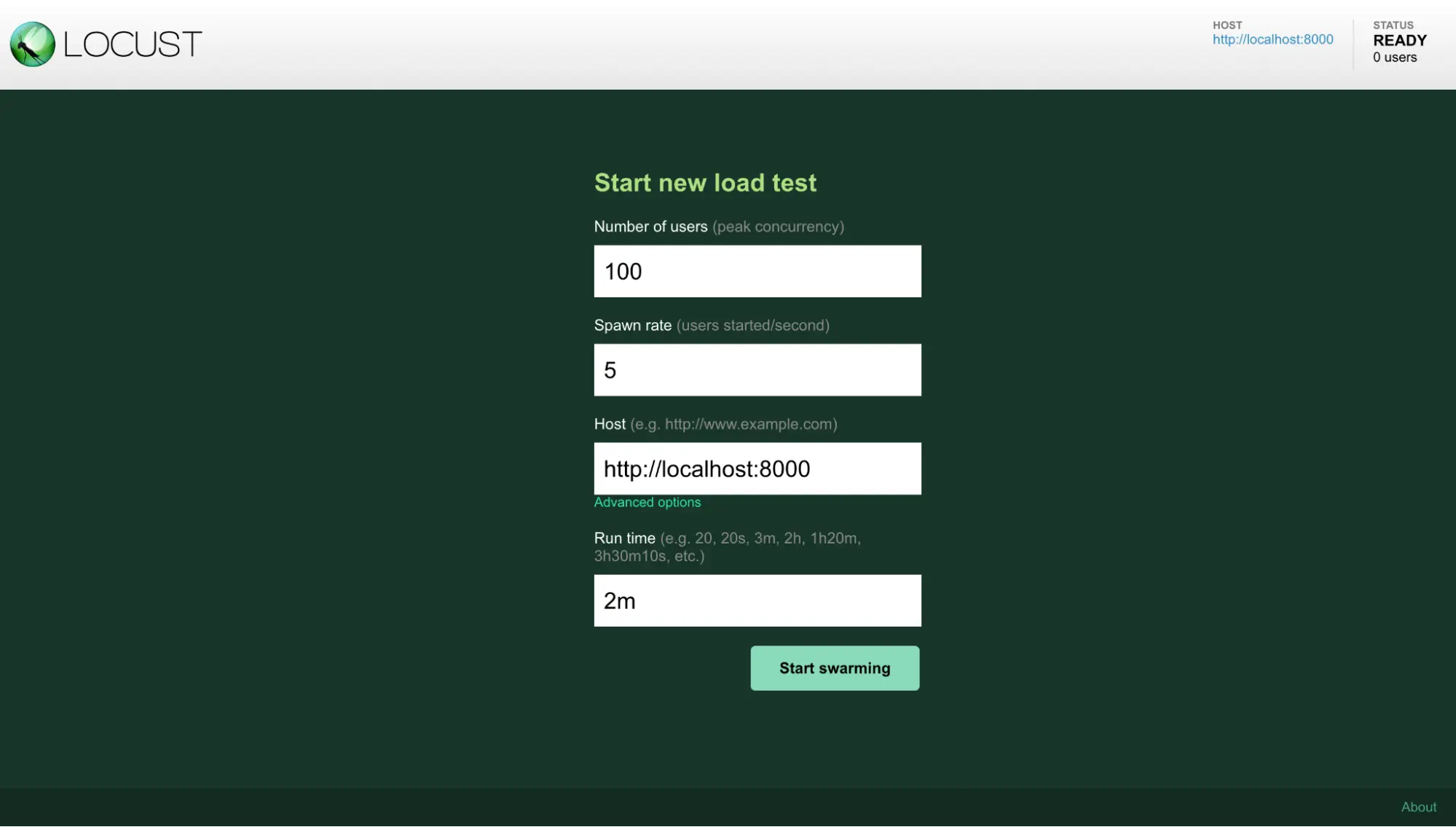

The tests are conducted in two different scenarios. In both cases, we simulate the activity of one hundred users interacting with our application.

In the initial load scenario, the setup is straightforward: one hundred users request our page every second (to be precise, between 0.8 and 1.2 seconds).
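This setup also puts a rough ceiling on throughput. In Locust, wait_time is the pause after a task finishes, so each simulated user completes one request per cycle of response time plus wait. The sketch below is a back-of-the-envelope estimate under that model, plugging in the single-request timings from earlier in the article; the helper name is hypothetical, and response times under real load will differ.

```python
def approx_requests_per_second(users: int, mean_wait_s: float, mean_response_s: float) -> float:
    """Rough estimate: each user completes one request per (response + wait) cycle."""
    return users / (mean_wait_s + mean_response_s)


# between(0.8, 1.2) draws the wait uniformly from that range, so the mean is 1.0 s.
MEAN_WAIT_S = (0.8 + 1.2) / 2

# Single-request timings reported earlier; used here only as illustrative inputs.
full_load_rps = approx_requests_per_second(100, MEAN_WAIT_S, 1.246)
facts_only_rps = approx_requests_per_second(100, MEAN_WAIT_S, 0.179)

print(f"Full load:  about {full_load_rps:.1f} requests/s")
print(f"Facts only: about {facts_only_rps:.1f} requests/s")
```

The estimate only shows why a slower endpoint lowers the achievable requests per second for a fixed number of users; it does not replace the measured results.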

For the lazy or partial load scenario, we design the test to mimic real-world app usage. We assign weights to different components of the page load process: the skeleton, header, and footer requests each have a weight of one, while requests for actual data have a weight of one hundred. This weighting reflects the assumption that once the page is initially loaded, users will primarily seek new fun facts, with the header and footer content remaining static or changing infrequently.
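To make the weighting concrete, the short calculation below turns the weights into the expected share of requests per task. Locust picks tasks at random in proportion to their weights, so these are long-run averages rather than exact counts; the dictionary keys are informal labels chosen for this sketch.

```python
# Task weights as described above.
weights = {
    "skeleton": 1,
    "header count": 1,
    "footer": 1,
    "data": 100,
}

total_weight = sum(weights.values())

# Expected long-run share of requests for each task.
shares = {name: weight / total_weight for name, weight in weights.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```

Roughly 97% of the simulated traffic therefore hits the data endpoint, which matches the assumption that users mostly request new fun facts.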

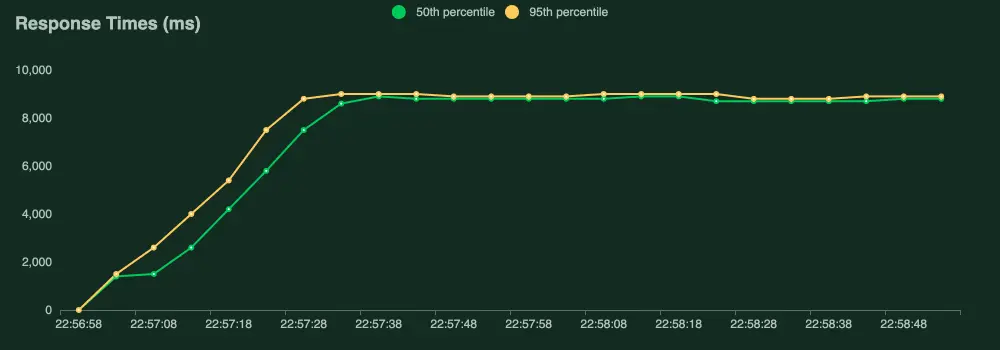

Initial Load

Used configuration:

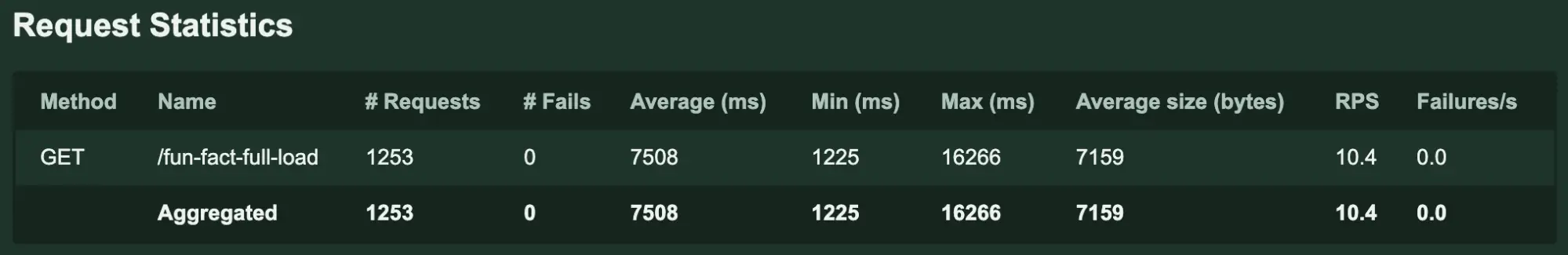

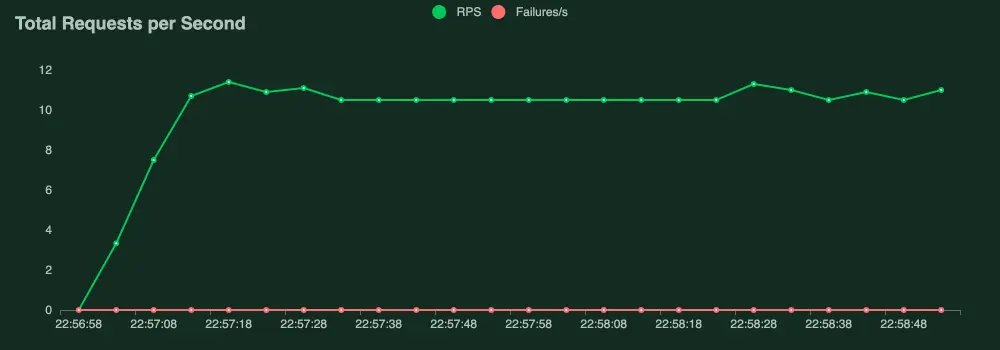

Results:

Performance test written using Locust:

from locust import HttpUser
from locust import between
from locust import task


class MyUser(HttpUser):
    wait_time = between(0.8, 1.2)  # Time between requests in seconds

    @task
    def fun_fact_full_load(self):
        self.client.get("/fun-fact-full-load")

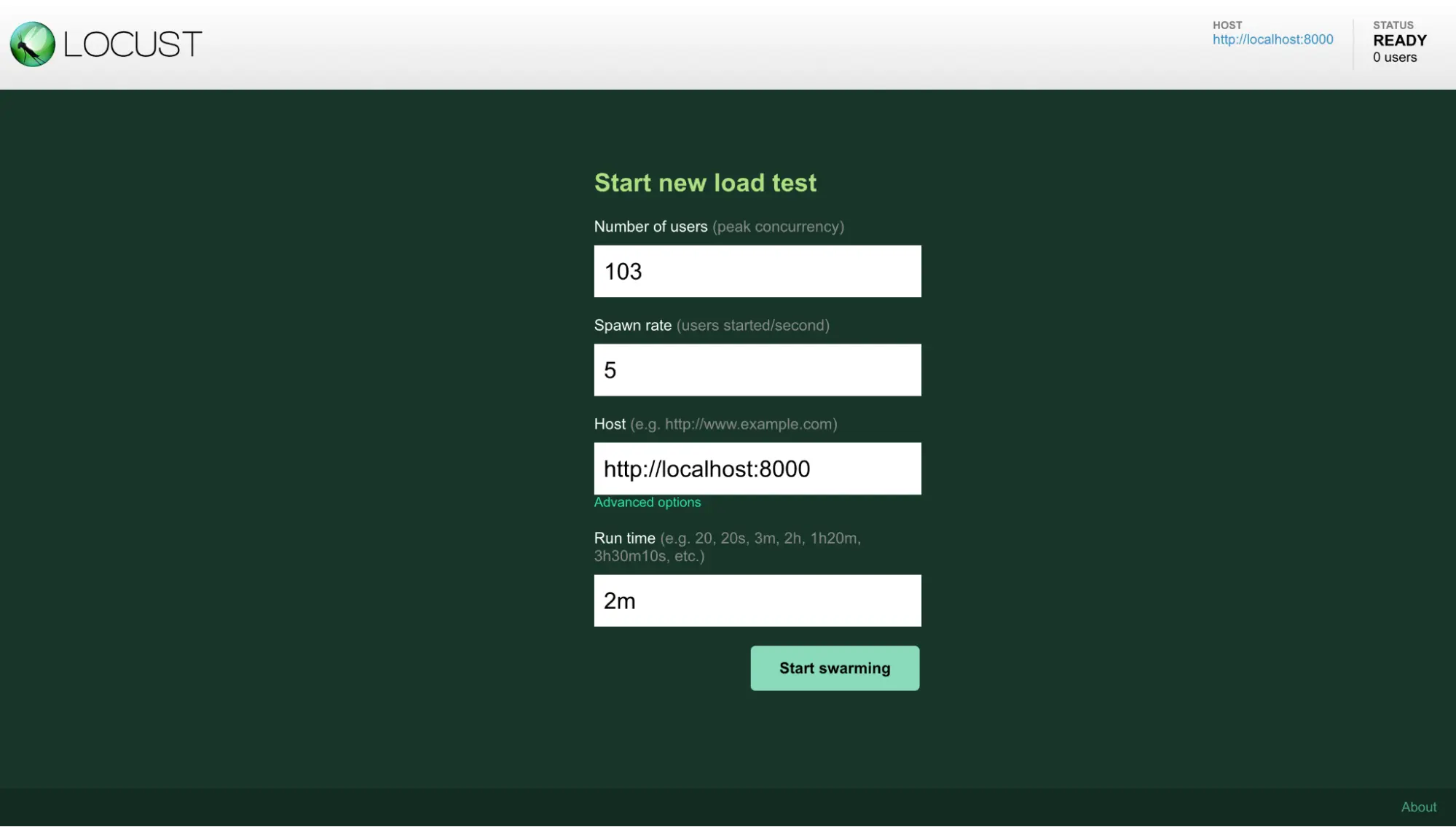

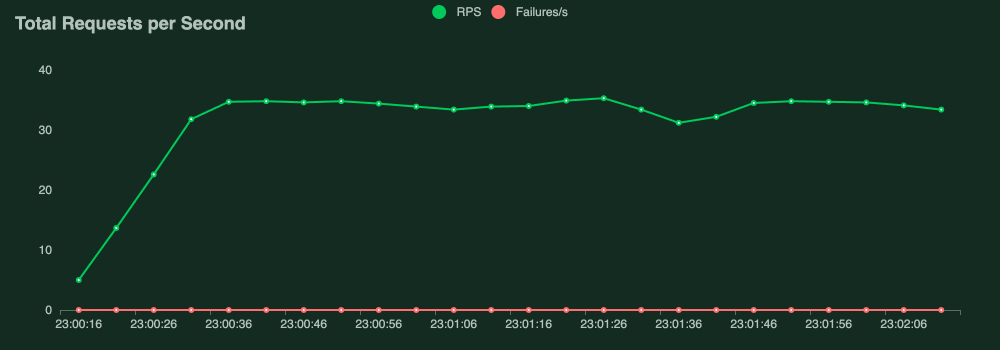

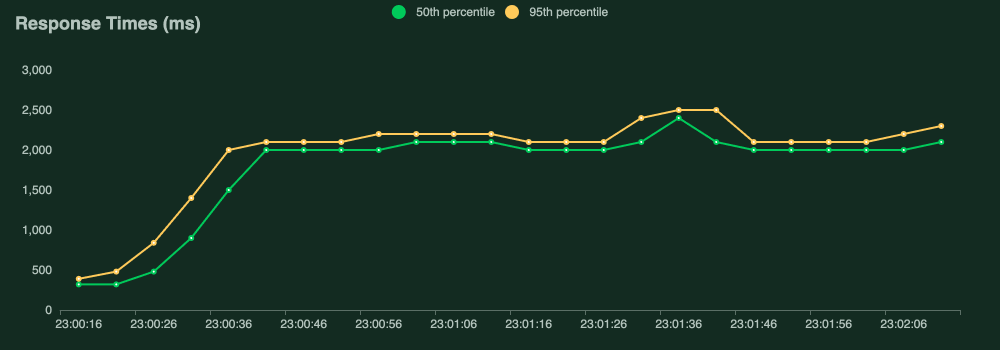

Lazy Load

Used configuration:

Results:

Performance test written using Locust:

from locust import HttpUser
from locust import between
from locust import task


class MyUser(HttpUser):
    wait_time = between(0.8, 1.2)  # Time between requests in seconds

    @task(1)
    def fun_fact_partial_load(self):
        self.client.get("/fun-fact-partial-load")

    @task(1)
    def fun_fact_partial_load_count(self):
        self.client.get("/fun-fact-partial-load-count")

    @task(1)
    def fun_fact_partial_load_footer(self):
        self.client.get("/fun-fact-partial-load-footer")

    @task(100)
    def fun_fact_partial_load_data(self):
        self.client.get("/fun-fact-partial-load-data")

Conclusions

The comparison between Lazy Load and Initial Load clearly demonstrates the superior efficiency of Lazy Load. The average execution time for Lazy Load is notably improved, showing a fourfold enhancement over the Initial Load approach. This efficiency extends further, enabling a higher Requests Per Second (RPS), signifying the application's capability to handle a greater volume of simultaneous requests.

E2E testing with Playwright:

Ensuring the seamless functionality and accurate presentation of elements within our application is paramount, and we achieve this through End-to-End (E2E) testing using Playwright.

In these carefully crafted tests, our focus is on validating the presence and proper functionality of critical elements, including navigation, engaging fact cards, and responsive buttons.

Why does Playwright play well with HTMX?

Playwright stands out as a robust E2E testing tool due to its advantages. It supports multiple browser contexts, enabling parallel execution for faster test runs. Playwright works with major browsers, ensuring comprehensive cross-browser testing coverage, and excels in headless and mobile device testing. Additionally, Playwright supports scripting execution, allowing for more complex test scenarios and interactions.

Furthermore, Playwright provides the capability to check visual aspects of applications. With features like screenshot comparison and pixel-perfect testing, Playwright enables teams to ensure that the visual appearance of their applications remains consistent across different browsers and environments.

For example, while standard unit tests would require writing tests for each individual endpoint, Playwright enables easy testing of the entire flow, including navigation between pages, form submissions, and dynamic interactions. Moreover, Playwright is exceptionally user-friendly with an easy installation process, and for added convenience, there is an official Docker image available, streamlining the setup for testing.

Note: To execute the prepared E2E tests, follow these commands:

make e2e-tests-build
make e2e-tests-bash
make e2e-tests

Before running E2E tests, install dependencies, set up the testing environment, and ensure the application is running locally or on the specified base URL. This rigorous E2E testing process, powered by Playwright, ensures a reliable and user-friendly application experience.
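As an illustration of how such a check might look for lazily loaded content, here is a minimal sketch using Playwright's synchronous Python API. It is not taken from the article's repository: the function names and the .fun-fact-card selector are hypothetical, and only the /fun-fact-partial-load path comes from the article. The helper waits for HTMX to swap the cards into the page before counting them.

```python
def count_lazy_loaded_cards(page, base_url: str, selector: str = ".fun-fact-card") -> int:
    """Open the partial-load page and count the cards once HTMX has loaded them.

    `page` is expected to be a Playwright Page; `selector` is a hypothetical
    CSS selector for one fact card and must be adapted to the real markup.
    """
    page.goto(f"{base_url}/fun-fact-partial-load")
    # Blocks until at least one matching element is present and visible,
    # or raises after the timeout (milliseconds).
    page.wait_for_selector(selector, timeout=5000)
    return page.locator(selector).count()


def run_against_local_server(base_url: str = "http://localhost:8000") -> int:
    """Launch headless Chromium and run the check (requires Playwright and its browsers)."""
    from playwright.sync_api import sync_playwright

    with sync_playwright() as playwright:
        browser = playwright.chromium.launch()
        try:
            return count_lazy_loaded_cards(browser.new_page(), base_url)
        finally:
            browser.close()
```

Because the waiting is explicit, the test does not need fixed sleeps to cope with content that arrives after the initial page load.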

Boosting Links and Forms with HTMX

HTMX introduces a game-changer with its dynamic hx-boost attribute, reshaping how links and forms work in web applications. By adding hx-boost="true" to links and forms, HTMX brings a new level of responsiveness, using AJAX to quickly and efficiently update content.

Imagine a boosted link to a fictional about us page:

<a href="/about-us" hx-boost="true">About Us</a>

Instead of reloading the whole page, HTMX uses AJAX to request /about-us, skipping unnecessary processing linked to the head tag. The result? A faster user experience, especially for pages with lots of scripts and styles.

It also works for forms:

<form hx-boost="true" action="/example" method="post">
    <input name="email" type="email" placeholder="Enter your email...">
    <button>Submit</button>
</form>

This boosted form uses AJAX for a quick journey to /example, smoothly updating the body's content. Importantly, it keeps the navigation and history functions, just like traditional forms.

Progressive enhancement

HTMX embraces progressive enhancement through features like hx-boost, ensuring broad accessibility. With hx-boost, even if JavaScript is disabled, links and forms gracefully degrade, maintaining essential functionality. This approach enhances user experience for modern browsers while accommodating users without JavaScript, aligning with traditional HTML accessibility recommendations for a more inclusive web application.
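On the server side, progressive enhancement usually means answering the same URL with either a full page or a fragment. HTMX sends an HX-Request: true header with its AJAX requests and an additional HX-Boosted: true header for boosted links and forms. The sketch below shows one way to use them; the function names are hypothetical, and headers can be any mapping of header names to values, such as the one a web framework exposes on its request object.

```python
from typing import Mapping


def _header(headers: Mapping[str, str], name: str) -> str:
    """Case-insensitive header lookup that works with a plain dict."""
    wanted = name.lower()
    for key, value in headers.items():
        if key.lower() == wanted:
            return value
    return ""


def needs_full_page(headers: Mapping[str, str]) -> bool:
    """Decide whether to render the whole page or only a fragment.

    - No HX-Request header: a normal browser navigation (or JavaScript is
      disabled), so the full page is required.
    - HX-Boosted: a boosted link or form; HTMX swaps the body, so it still
      expects a full page.
    - Otherwise: a regular HTMX request that only needs a fragment.
    """
    is_htmx = _header(headers, "HX-Request") == "true"
    is_boosted = _header(headers, "HX-Boosted") == "true"
    return (not is_htmx) or is_boosted
```

A view could then pick between a full-page template and a partial template based on the returned value.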

Enhancing Navigation with hx-push-url

Discover the power of the hx-push-url attribute in HTMX, offering precise control over URL updates in the browser's location history.

When dealing with custom controls, such as a POST button, you may observe that the URL in the location bar remains static after the action. By adding hx-push-url="true" to your button declaration, you direct HTMX to update the location bar with the resulting URL and create a new history entry. This allows users to move back and forth with the browser's navigation buttons.

<button hx-post="/users/{{ user.id }}/confirm"
        hx-push-url="true"
        hx-target="body">
    Confirm User
</button>
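The URL can also be chosen by the server. Recent HTMX versions document an HX-Push-Url response header that pushes the given URL into the browser history. The sketch below only builds that header; the helper name and the /users/{id} path are hypothetical and would depend on your routes.

```python
from typing import Dict


def confirm_response_headers(user_id: int) -> Dict[str, str]:
    """Headers for the response to the confirm request.

    Assumption: after confirming, the user should land on a detail page at
    /users/<id>; that path is made up for this sketch.
    """
    return {"HX-Push-Url": f"/users/{user_id}"}


print(confirm_response_headers(42))
```

This is useful when the URL that should appear in the location bar differs from the URL the request was sent to.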

Improving Content Updates with hx-target

In HTMX, when triggering requests, the default target is typically set to the same element where the triggering action occurs. However, there are scenarios where customization is beneficial, such as when updating input text dynamically based on user input events like change or keyup.

Let's explore a practical example involving a search form:

<form action="/data" method="get">
    <label for="search">Search</label>
    <input
        id="search"
        type="text"
        name="search"
        hx-get="/data"
        hx-trigger="keyup delay:200ms changed"
        hx-target="#search-results"
    />
</form>

<div id="search-results"></div>

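Here, the search request fires while the user types: HTMX waits until 200 ms have passed without a keystroke, sends the request only if the input's value has changed, and places the response in the #search-results element.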
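The endpoint behind such a form only needs to return an HTML fragment for the results container. The following framework-independent sketch shows the idea with an in-memory list; the function names and the sample facts are made up for illustration, and a real handler would query the database instead.

```python
from html import escape
from typing import Iterable, List


def search_facts(query: str, facts: Iterable[str]) -> List[str]:
    """Case-insensitive substring search; an empty query returns no results."""
    needle = query.strip().lower()
    if not needle:
        return []
    return [fact for fact in facts if needle in fact.lower()]


def render_results_fragment(matches: List[str]) -> str:
    """Render only the fragment that is swapped into #search-results."""
    if not matches:
        return "<p>No results</p>"
    # Escape the text so stored content cannot inject markup into the page.
    items = "".join(f"<li>{escape(match)}</li>" for match in matches)
    return f"<ul>{items}</ul>"


# Made-up sample data for the sketch.
SAMPLE_FACTS = ["Honey never spoils", "Octopuses have three hearts"]
print(render_results_fragment(search_facts("heart", SAMPLE_FACTS)))
```

Returning a fragment rather than a full page keeps the response small, which matters when a request is sent on almost every pause in typing.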

Advanced Content Extraction with hx-select

When dealing with complex responses or wanting to extract specific elements from a larger payload, the hx-select attribute in HTMX proves to be a valuable tool. This advanced feature allows you to precisely target and retrieve content based on identifiers, providing a streamlined approach to integrating reusable templates into your application.

Imagine a scenario where a package returns a fully rendered page, but your goal is to extract and display a new message delivered from the server.

<html>
<head>
</head>
<body>
    <div id="login-container">
        <p>Login to the application</p>
        <form
            hx-post="/login/"
            hx-target="#login-container"
            hx-select="#login-container"
        >
            <input type="text" name="login" />
            <input type="password" name="password" />
        </form>
    </div>
</body>
</html>

In this example, submitting the form (hx-post="/login/") sends the request, and HTMX then uses hx-select to pick only the element with the ID login-container out of the server's full-page response and swaps it into the existing #login-container element named in hx-target. This use of hx-select enables precise content extraction, so a full-page response can still drive a small, targeted update.

Enhance User Experience with Request Indicators

In the realm of asynchronous web applications, informing users about ongoing background processes becomes crucial. HTMX introduces the concept of request indicators, represented by the htmx-indicator class, to seamlessly communicate the occurrence of AJAX requests without interrupting the user flow.

By default, elements with the htmx-indicator class have zero opacity, rendering them invisible while present in the DOM. When an HTMX request is initiated, the htmx-request class is applied to a specified element, causing a child element with the htmx-indicator class to transition to an opacity of 1, making the indicator visible.

Consider the following example where a button click triggers an HTMX request, revealing a spinner GIF:

<button hx-get="/click">
    Click Me!
    <img class="htmx-indicator" src="/spinner.gif">
</button>

In this instance, when the button is clicked, the htmx-request class is added, making the spinner GIF visible and signaling an ongoing request.

Customizing the appearance of the indicator is flexible. You can utilize CSS transitions or create your own transition mechanism based on your application's requirements. For instance:

.htmx-indicator {
    display: none;
}

.htmx-request .htmx-indicator {
    display: inline;
}

.htmx-request.htmx-indicator {
    display: inline;
}

If you wish to associate the htmx-request class with a different element, the hx-indicator attribute, coupled with a CSS selector, provides this flexibility. For instance:

<div>
    <button hx-get="/click" hx-indicator="#indicator">
        Click Me!
    </button>
    <img id="indicator" class="htmx-indicator" src="/spinner.gif"/>
</div>

Fine-Tuning Lazy Loading

One additional and highly beneficial feature provided by HTMX is lazy loading, which comes in several variants. The first and most basic approach uses hx-trigger="load". This instructs HTMX to trigger a request for data after the page has finished loading. Combining this with a loader can significantly enhance the user experience by visually indicating ongoing actions or providing information about the loading process.

<div hx-get="/path/to/content" hx-trigger="load">
    <!-- Content loaded after the page has loaded -->
</div>

Another effective approach is to initiate data requests when an element becomes visible or is revealed within a certain viewport. HTMX offers two options for this: hx-trigger="revealed" and hx-trigger="intersect". For instance, using hx-trigger="revealed" allows us to load content as the user scrolls down to the element.

<div hx-get="/path/to/content" hx-trigger="revealed">
    <!-- Content loaded when this element is revealed -->
</div>

On the other hand, hx-trigger="intersect threshold:0.5" enables us to load content when an element is partially visible, with the specified threshold (in this case, when 50% of the element becomes visible).

<div hx-get="/path/to/content" hx-trigger="intersect threshold:0.5">
    <!-- Content loaded when at least 50% of this element is visible -->
</div>

This level of flexibility ensures that data is fetched precisely when needed, optimizing the overall performance and responsiveness of the web application.

Enhancing FastAPI Security with Templates

Discover the advanced security features embedded in FastAPI when incorporating templates, ranging from mitigating vulnerabilities to implementing robust data handling practices.

CSRF Token: Strengthening Your Defenses

When configuring your project, first verify whether a CSRF token is actually necessary. You can take advantage of the SameSite cookie attribute by setting one cookie to "lax" and another to "strict". Always check for the existence of the "strict" cookie before executing database writes or other sensitive actions, as its absence indicates a potential CSRF threat. If a CSRF token remains necessary, consider the lightweight fastapi-csrf-protect package for Cross-Site Request Forgery (CSRF, sometimes written XSRF) protection. If you would like to learn more about CSRF token protection, check out this interesting series of articles.
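The two-cookie idea can be sketched with the standard library alone. The cookie names, helper names, and the choice to store the same session ID in both cookies are assumptions made for this illustration; the check mirrors the rule above by looking only for the presence of the "strict" cookie, and a real application would also validate its value against the session.

```python
from http.cookies import SimpleCookie
from typing import List, Mapping

# Hypothetical cookie names.
LAX_COOKIE = "session_lax"
STRICT_COOKIE = "session_strict"


def build_set_cookie_headers(session_id: str) -> List[str]:
    """Build two Set-Cookie header values, one SameSite=Lax and one SameSite=Strict."""
    cookie = SimpleCookie()
    for name, policy in ((LAX_COOKIE, "Lax"), (STRICT_COOKIE, "Strict")):
        cookie[name] = session_id
        cookie[name]["samesite"] = policy
        cookie[name]["httponly"] = True
        cookie[name]["secure"] = True
        cookie[name]["path"] = "/"
    return [morsel.OutputString() for morsel in cookie.values()]


def is_safe_to_write(request_cookies: Mapping[str, str]) -> bool:
    """Browsers omit SameSite=Strict cookies on cross-site requests, so a
    missing strict cookie suggests the request did not originate from our site."""
    return bool(request_cookies.get(STRICT_COOKIE))


for header_value in build_set_cookie_headers("abc123"):
    print("Set-Cookie:", header_value)
```

The lax cookie keeps users logged in when they follow a link from another site, while the strict cookie gates writes and other sensitive actions.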

Example login form:

from fastapi import FastAPI, Request, Depends
from fastapi.responses import JSONResponse
from fastapi.templating import Jinja2Templates
from fastapi_csrf_protect import CsrfProtect
from fastapi_csrf_protect.exceptions import CsrfProtectError
from pydantic import BaseModel

app = FastAPI()
templates = Jinja2Templates(directory="templates")


class CsrfSettings(BaseModel):
    secret_key: str = "asecrettoeverybody"
    cookie_samesite: str = "none"


@CsrfProtect.load_config
def get_csrf_config():
    return CsrfSettings()


@app.get("/login")
def form(request: Request, csrf_protect: CsrfProtect = Depends()):
    """
    Returns form template.
    """
    csrf_token, signed_token = csrf_protect.generate_csrf_tokens()
    response = templates.TemplateResponse("form.html", {"request": request, "csrf_token": csrf_token})
    csrf_protect.set_csrf_cookie(signed_token, response)
    return response


@app.post("/login", response_class=JSONResponse)
async def create_post(request: Request, csrf_protect: CsrfProtect = Depends()):
    """
    Creates a new Post
    """
    await csrf_protect.validate_csrf(request)
    response: JSONResponse = JSONResponse(status_code=200, content={"detail": "OK"})
    csrf_protect.unset_csrf_cookie(response)  # prevent token reuse
    return response


@app.exception_handler(CsrfProtectError)
def csrf_protect_exception_handler(request: Request, exc: CsrfProtectError):
    return JSONResponse(status_code=exc.status_code, content={"detail": exc.message})

GitHub source: aekasitt/fastapi-csrf-protect

Summary

In summary, we've gained significant insights into optimizing websites for better performance, speed, and security. FastAPI and HTMX emerge as a formidable duo for constructing modern web applications. Our exploration revealed that HTMX demonstrates impressive performance, particularly in content loading compared to conventional methods. Through rigorous testing, we've deciphered techniques for efficient and rapid content loading. Leveraging tools like Playwright and adhering to security best practices, we can craft websites that not only deliver swift performance but also prioritize user safety. This guide serves as a roadmap for developers aspiring to create exceptional websites that excel in functionality while safeguarding users' information. Feel free to reach out to our team if you have further questions or need guidance on any of these technologies. Let us help you succeed with your web development projects.

Download the repository and begin your next project today.